AI Agent

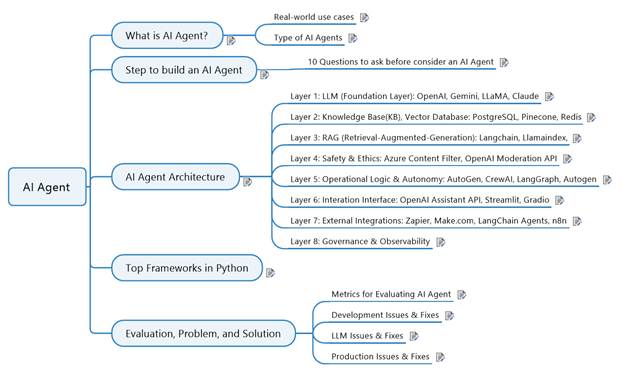

1 What is an AI Agent?

1.1 Real-world use cases

1.2 Types of AI Agents

2 Steps to build an AI Agent

2.1 10 Questions to ask before considering an AI Agent

3 AI Agent Architecture

3.1 Layer 1: LLM (Foundation Layer): OpenAI, Gemini, LLaMA, Claude

3.2 Layer 2: Knowledge Base (KB), Vector Database: PostgreSQL, Pinecone, Redis

3.3 Layer 3: RAG (Retrieval-Augmented Generation): LangChain, LlamaIndex

3.4 Layer 4: Safety & Ethics: Azure Content Filter, OpenAI Moderation API

3.5 Layer 5: Operational Logic & Autonomy: AutoGen, CrewAI, LangGraph

3.6 Layer 6: Interaction Interface: OpenAI Assistants API, Streamlit, Gradio

3.7 Layer 7: External Integrations: Zapier, Make.com, LangChain Agents, n8n

3.8 Layer 8: Governance & Observability

4 Top Frameworks in Python

5 Evaluation, Problem, and Solution

5.1 Metrics for Evaluating AI Agent

5.2 Development Issues & Fixes

5.3 LLM Issues & Fixes

5.4 Production Issues & Fixes

1 What is an AI Agent?

An AI agent is a system that can perceive its environment, process information, make decisions, and take actions to achieve a specific goal. Think of it as an autonomous entity that can perform tasks on your behalf.

1.1 Real-world use cases

- Clinical History Search Engine
- Predictive Maintenance Agent
- Protocol Summarizer
- IOQ/IOK Documents Extraction
- Route Optimization System
- Marketing Campaign Agent
- SOAP Notes Generator
- Inventory Management Assistant
- Anti-Fraud Agent

1.2 Types of AI Agents

AI Agent Types Comparison Table

| Agent Type | Core Capability | Autonomy Level | Typical Use Cases | Pros | Cons |
|---|---|---|---|---|---|
| Fixed Automation | Performs pre-defined tasks with rigid logic | 🔹 Minimal | Manufacturing, routine workflows | Fast, predictable, low-cost | No adaptability or learning |
| LLM-Enhanced | Uses large language models for flexible task execution | 🔸 Moderate | Email summarization, content generation | Natural language understanding, broader versatility | Limited reasoning, no external memory |
| ReAct / ReAct + RAG | Combines reasoning and acting (ReAct) with retrieval from databases | 🔸 Moderate–High | Q&A, research tasks, code generation | Context-aware, info-rich, iterative reasoning | Can be slow; needs strong retrieval configuration |
| Tool-Enhanced | Accesses external tools, APIs, or databases | 🔸 Moderate–High | Web scraping, automation, analytics | Scales real-world utility, can fetch live data | Tool calls may fail; more complexity to manage |
| Self-Reflecting | Evaluates own actions and learns from errors | 🔸 High | Strategy agents, creative writing, multi-step logic | Improves iteratively; useful for longer workflows | Requires evaluation loops; can get stuck in reflection |
| Environment Controllers | Interacts with real or simulated environments | 🔸 High | Games, robotics, smart homes | Real-time decisions; supports feedback from surroundings | Needs environment simulators; harder to test |
| Self-Learning | Learns from new data or experiences without retraining | 🔺 Very High | Predictive agents, personalization systems | Adaptive, data-driven improvements | Hard to control outcomes; risks of model drift |

🔹 = Basic autonomy

🔸 = Intermediate autonomy

🔺 = Advanced autonomy

2 Steps to build an AI Agent

1) Define the Goal: Clearly state what you want the agent to accomplish. This will guide the entire development process.

2) Choose the Right Model: Select the appropriate AI model for the task. This could be a large language model (LLM) like GPT-4, a smaller, more specialized model, or a combination of models.

3) Gather Data: Collect and prepare the data the agent will need to learn and make decisions. This could include documents, conversation histories, or data from external APIs.

4) Train the Model (if necessary): For learning agents, you'll need to train the model on your data to improve its performance on the specific task.

5) Develop the Orchestration Layer: This is the logic that governs how the agent processes information, plans, and executes actions. It connects the model to the tools and data it needs.

6) Integrate Tools: Provide the agent with the necessary tools to interact with the outside world. This could include web search, file access, or connections to other software.

7) Deploy and Monitor: Once built, deploy the agent and continuously monitor its performance to identify areas for improvement.
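Steps 5 and 6 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `fake_model`, `TOOLS`, and `run_agent` are hypothetical names, and the rule-based `fake_model` stands in for a real LLM call, which would normally decide the next action.

```python
from typing import Callable, Dict, List, Tuple

# Tool registry (step 6): each tool is a plain function the agent may call.
# Both tools are toy examples invented for this sketch.
TOOLS: Dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split("+"))),
    "upper": lambda arg: arg.upper(),
}

def fake_model(goal: str, history: List[Tuple[str, str, str]]) -> Tuple[str, str]:
    """Stand-in for an LLM call (assumption): returns (action, argument).

    Rule: if nothing has been done yet and the goal looks like an addition,
    call the "add" tool; otherwise finish with the most recent result.
    """
    if not history and "+" in goal:
        return "add", goal
    last_result = history[-1][2] if history else goal
    return "finish", last_result

def run_agent(goal: str, max_steps: int = 5) -> str:
    """Orchestration loop (step 5): ask the model, run the chosen tool, repeat."""
    history: List[Tuple[str, str, str]] = []
    for _ in range(max_steps):  # hard step limit so the loop always terminates
        action, arg = fake_model(goal, history)
        if action == "finish":
            return arg
        if action not in TOOLS:
            return f"error: unknown tool {action!r}"
        result = TOOLS[action](arg)
        history.append((action, arg, result))
    return "error: step limit reached"

print(run_agent("2+3"))
```

Keeping the model, the tool registry, and the loop separate means the stand-in model can later be swapped for a real LLM client without touching the tools.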

2.1 10 Questions to ask before considering an AI Agent

1) What is the complexity of the task?

2) How often does the task occur?

3) What is the expected volume of data or queries?

4) Does the task require adaptability?

5) Can the task benefit from learning and evolving over time?

6) What level of accuracy is required?

7) Is human expertise or emotional intelligence essential?

8) What are the privacy and security implications?

9) What are the regulatory and compliance requirements?

10) What is the cost-benefit analysis?

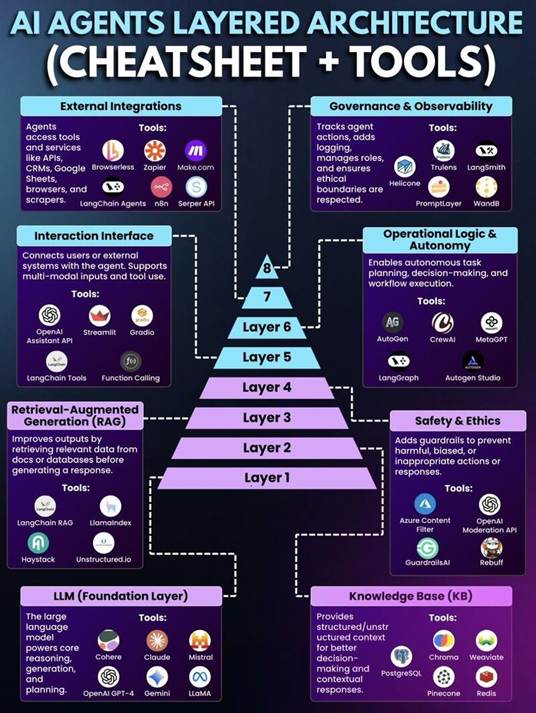

3.1 Layer 1: LLM (Foundation Layer): OpenAI, Gemini, LLaMA, Claude

Purpose: Core reasoning, generation, planning.

Tools: GPT-4 (OpenAI), Claude, Cohere, Mistral, Gemini, LLaMA

3.2 Layer 2: Knowledge Base (KB), Vector Database: PostgreSQL, Pinecone, Redis

Purpose: Structured/unstructured context for better responses.

Tools: Chroma, Weaviate, Pinecone, Redis, PostgreSQL
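The core operation a vector database provides is nearest-neighbour search over embeddings. The sketch below shows that idea with an in-memory dictionary and cosine similarity. It does not use the API of any of the tools listed above, and the three-dimensional vectors and document names are made up for illustration (real embeddings have hundreds of dimensions and come from an embedding model).

```python
import math
from typing import Dict, List, Tuple

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(query: List[float], store: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Return the k stored items most similar to the query, best first."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in store.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]

# Hypothetical store: document id -> toy embedding.
STORE = {
    "maintenance_manual": [0.9, 0.1, 0.0],
    "marketing_plan": [0.0, 0.2, 0.9],
    "sensor_log": [0.8, 0.3, 0.1],
}

for doc_id, score in top_k([1.0, 0.0, 0.0], STORE):
    print(doc_id, round(score, 3))
```

A dedicated vector database replaces the linear scan in `top_k` with an approximate index so that search stays fast as the store grows.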

3.3 Layer 3: RAG (Retrieval-Augmented Generation): LangChain, LlamaIndex

Purpose: Retrieves relevant data from documents and databases before responding.

Tools: LangChain RAG, LlamaIndex, Haystack, Unstructured.io
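The retrieve-then-respond flow can be illustrated without any framework. In this sketch the retriever ranks documents by simple word overlap, a deliberately crude substitute for embedding search, and the "generation" step stops at building the prompt that would be sent to an LLM. The sample documents and the names `retrieve` and `build_prompt` are assumptions made for the example.

```python
from typing import List, Set

# Made-up sample documents for illustration only.
DOCS = [
    "Pump P-101 requires bearing inspection every 500 operating hours.",
    "The marketing campaign for Q3 targets returning customers.",
    "Vibration above 7 mm/s on pump P-101 indicates bearing wear.",
]

def tokenize(text: str) -> Set[str]:
    """Lowercase words with surrounding punctuation stripped."""
    return {word.strip(".,?!").lower() for word in text.split()}

def retrieve(question: str, docs: List[str], k: int = 2) -> List[str]:
    """Rank documents by the number of words shared with the question."""
    q_words = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q_words & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(question: str, docs: List[str]) -> str:
    """Place the retrieved passages ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

print(build_prompt("When does pump P-101 need bearing inspection?", DOCS))
```

The frameworks listed above handle the same two steps at scale: chunking and indexing documents, then injecting the best matches into the prompt.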

3.4 Layer 4: Safety & Ethics: Azure Content Filter, OpenAI Moderation API

Purpose: Adds guardrails to prevent bias, harm, or inappropriate behavior.

Tools: Azure Content Filter, OpenAI Moderation API, Guardrails AI, Rebuff
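As a rough picture of what a guardrail does, the sketch below applies two rule-based checks: an input filter against a blocklist and an output filter that redacts email addresses. It does not call any of the hosted services named above. The blocklist, the regular expression, and the function names are hypothetical and far simpler than a production filter.

```python
import re
from typing import Tuple

# Hypothetical blocklist; a real deployment would use a moderation service.
BLOCKED_TERMS = ("password", "ssn")

# Simplified email pattern, sufficient for the demo only.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def check_input(text: str) -> Tuple[bool, str]:
    """Reject user input that contains a blocked term."""
    lowered = text.lower()
    for term in BLOCKED_TERMS:
        if term in lowered:
            return False, f"blocked term: {term}"
    return True, "ok"

def redact_output(text: str) -> str:
    """Mask email addresses before the agent's answer is shown."""
    return EMAIL_RE.sub("[REDACTED_EMAIL]", text)

print(check_input("What is the admin password?"))
print(redact_output("Contact jane.doe@example.com today"))
```

Input checks run before the LLM call and output checks run after it, so a failure in either direction can be stopped or escalated to a human.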

3.5 Layer 5: Operational Logic & Autonomy: AutoGen, CrewAI, LangGraph

Purpose: Autonomous task planning, decision-making, and execution.

Tools: AutoGen, CrewAI, MetaGPT, LangGraph, AutoGen Studio

3.6 Layer 6: Interaction Interface: OpenAI Assistants API, Streamlit, Gradio

Purpose: Connects users or systems with the agent (multimodal input/tool use).

Tools: OpenAI Assistants API, Streamlit, Gradio, LangChain Tools, Function Calling

3.7 Layer 7: External Integrations: Zapier, Make.com, LangChain Agents, n8n

Purpose: Access tools/services like APIs, CRMs, browsers, scrapers.

Tools: Browserless, Zapier, Make.com, Serper API, LangChain Agents, n8n

3.8 Layer 8: Governance & Observability

Purpose: Tracks actions, logging, roles, ethics, boundaries.

Tools: Helicone, PromptLayer, TruLens, LangSmith, W&B
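Action tracking can be pictured as a wrapper that records every tool call. The sketch below uses a decorator and an in-memory list. The names `TRACE` and `traced` are hypothetical, and none of the platforms listed above are involved; a real setup would export these records to one of them.

```python
import functools
import time
from typing import Any, Callable, Dict, List

TRACE: List[Dict[str, Any]] = []  # in-memory trace, for illustration only

def traced(tool_name: str) -> Callable:
    """Decorator that logs each call's arguments, outcome, and latency."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            record: Dict[str, Any] = {"tool": tool_name, "args": args, "ok": True}
            try:
                return fn(*args, **kwargs)
            except Exception as exc:
                record["ok"] = False
                record["error"] = repr(exc)
                raise  # the caller still sees the failure
            finally:
                record["latency_s"] = time.perf_counter() - start
                TRACE.append(record)
        return wrapper
    return decorator

@traced("divide")
def divide(a: float, b: float) -> float:
    return a / b

divide(6, 3)
try:
    divide(1, 0)  # deliberately failing call to show error capture
except ZeroDivisionError:
    pass

for entry in TRACE:
    print(entry["tool"], entry["ok"], f"{entry['latency_s']:.6f}s")
```

Because the record is appended in the `finally` clause, failed calls are logged as reliably as successful ones.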

4 Top Frameworks in Python

AI Agent Frameworks Comparison Table

| Framework | Core Functionality | Best For | Strengths | Limitations |
|---|---|---|---|---|
| LangChain | Modular orchestration of LLM agents & tools | Custom agent pipelines, chains | Rich ecosystem, tool integration, community support | Can be complex to configure for large workflows |
| DeepLake | Data lake optimized for ML and embeddings | Storing embeddings, training data | Fast vector search, scalable, versioned datasets | Not focused on agent logic or orchestration |
| AutoGPT | Autonomous agent that breaks down tasks recursively | Task automation with minimal setup | Easy to use, popular, autonomous prompt chaining | Less control, fragile tool usage, lacks granularity |
| OpenAI Swarm | Multi-agent coordination via OpenAI LLMs | Parallel agent tasks, delegation | Powerful for collaboration, scalable task resolution | Limited documentation, experimental features |
| Prodigy | Active learning annotation tool | Human-in-the-loop training workflows | Real-time annotation, supports NLP and computer vision | Focused on dataset labeling, not agent execution |
| LlamaIndex | Indexing and querying for agent memory & RAG | Long-term memory, document retrieval | Fast context access, structured doc ingestion | Not an agent runtime, needs pairing with orchestration |
| AutoGen | Multi-agent workflow engine from Microsoft | Role-based agents and tool usage | Chat-based coordination, extensible, open source | Requires planning; limited examples for complex agents |
| Meta AgentKit | Metamodel control and orchestration | Agent switching, multi-model tasks | Model flexibility, advanced routing | Early-stage, may need custom tuning for workflows |
| Vertex AI Agent Builder | Google’s agent creation platform in Vertex AI | Cloud-native agent deployment | Seamless GCP integration, low-code options | Tied to Google Cloud, less flexible than open frameworks |

5 Evaluation, Problem, and Solution

5.1 Metrics for Evaluating AI Agent

- Latency & Speed → Tool call latency, task duration
- API Efficiency → Call frequency, token usage
- Cost & Resource → Task cost, context usage
- Error Rate → LLM call failures
- Task Success → Task completion rate
- Human Input → Steps per task, human help needed
- Instruction Match → Follows human instructions
- Output Format → Format and context accuracy
- Tool Use → Tool selection, arguments, success
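Several of these metrics can be computed directly from per-task logs. The sketch below assumes a hypothetical `TaskRun` record and a flat price per 1,000 tokens. The field names, the sample runs, and the price are invented for illustration, not taken from any real system.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List

@dataclass
class TaskRun:
    """One logged agent task (hypothetical schema)."""
    completed: bool
    duration_s: float
    llm_calls: int
    llm_failures: int
    tokens: int
    human_interventions: int

def summarize(runs: List[TaskRun], usd_per_1k_tokens: float) -> Dict[str, float]:
    """Aggregate task success, latency, error rate, cost, and human input."""
    total_calls = sum(r.llm_calls for r in runs)
    return {
        "task_completion_rate": sum(r.completed for r in runs) / len(runs),
        "mean_task_duration_s": mean(r.duration_s for r in runs),
        "llm_error_rate": (
            sum(r.llm_failures for r in runs) / total_calls if total_calls else 0.0
        ),
        "mean_cost_usd": mean(r.tokens for r in runs) / 1000 * usd_per_1k_tokens,
        "human_help_rate": sum(r.human_interventions > 0 for r in runs) / len(runs),
    }

# Made-up sample data.
RUNS = [
    TaskRun(True, 4.0, 5, 0, 2000, 0),
    TaskRun(False, 10.0, 10, 2, 6000, 1),
    TaskRun(True, 4.0, 5, 0, 1000, 0),
    TaskRun(True, 6.0, 5, 0, 3000, 1),
]

for name, value in summarize(RUNS, usd_per_1k_tokens=0.01).items():
    print(f"{name}: {value:.3f}")
```

Metrics such as instruction match and output format usually need a human or model-based grader and are not covered by this log-only summary.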

5.2 Development Issues & Fixes

- Poor prompts → Define objectives, craft detailed personas, use effective prompting models
- Weak evaluation → Real-world tasks, continuous evaluation, feedback loops

5.3 LLM Issues & Fixes

- Hard to steer → Specialized prompts, hierarchy, fine-tuning
- Too expensive → Reduce context, use smaller/cloud models
- Planning fails → Decompose tasks, multi-agent systems
- Weak reasoning → Improve reasoning, fine-tune, use specialist agents
- Tool errors → Set parameters, validate outputs, add verification

5.4 Production Issues & Fixes

- No guardrails → Rule filters, human oversight, ethics frameworks
- Scaling limits → Scalable infra, resource control, performance tracking
- No recovery → Add redundancy, automate failover, detect failures
- Infinite loops → Define stop rules, smarter planning, monitor behavior
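Three of these fixes (validate tool outputs, automate failover, define stop rules) fit into one small helper. The sketch below is one possible shape, not a prescribed pattern: `call_with_failover`, `flaky_primary`, and `backup` are hypothetical names, and the weather tools are simulated rather than real APIs.

```python
from typing import Callable, Optional, Sequence

def call_with_failover(
    tools: Sequence[Callable[[str], str]],
    arg: str,
    is_valid: Callable[[str], bool],
    retries_per_tool: int = 2,
) -> Optional[str]:
    """Try each tool in order, retrying on exceptions or invalid output.

    The bounded retry count acts as a stop rule, so the helper cannot loop
    forever. Returns None when every tool has been exhausted.
    """
    for tool in tools:
        for _ in range(retries_per_tool):
            try:
                result = tool(arg)
            except Exception:
                continue  # failure detected: retry, then fail over
            if is_valid(result):  # verify output before trusting it
                return result
    return None  # the caller decides how to escalate, e.g. to a human

def flaky_primary(city: str) -> str:
    """Simulated outage of a primary tool."""
    raise TimeoutError("primary weather API timed out")

def backup(city: str) -> str:
    """Simulated redundant tool with a fixed answer."""
    return f"{city}: 21C"

print(call_with_failover([flaky_primary, backup], "Oslo", lambda s: s.endswith("C")))
```

Returning `None` instead of raising keeps the decision about escalation in the orchestration layer, where human oversight can be attached.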