Generative AI: Large Language Model

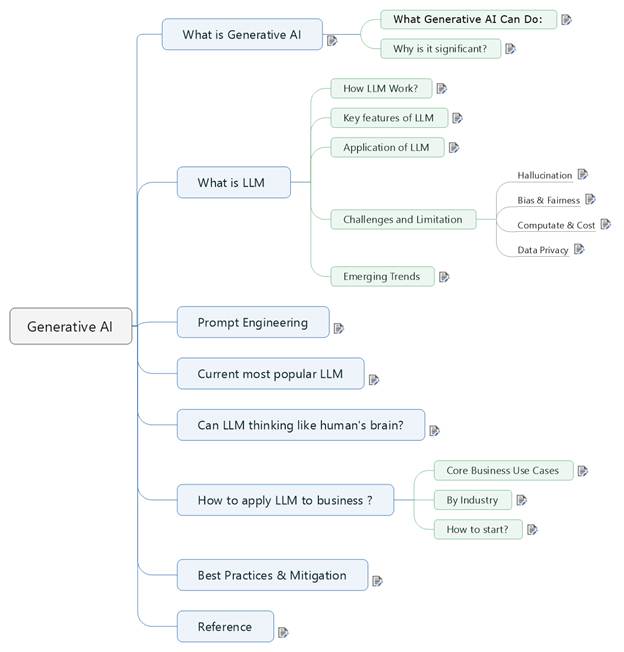

1 What is Generative AI

1.1 What Generative AI Can Do

1.2 Why is it significant?

2 What is LLM

2.1 How LLM Work?

2.2 Key features of LLM

2.3 Application of LLM

2.4 Challenges and Limitation

2.4.1 Hallucination

2.4.2 Bias & Fairness

2.4.3 Compute & Cost

2.4.4 Data Privacy

2.5 Emerging Trends

3 Prompt Engineering

4 Current most popular LLM

5 Can LLMs think like a human brain?

6 How to apply LLM to business?

6.1 Core Business Use Cases

6.2 By Industry

6.3 How to start?

7 Best Practices & Mitigation

8 Reference

1 What is Generative AI

Generative AI is a fascinating and rapidly evolving subfield of artificial intelligence that focuses on creating new, original content rather than just analyzing or classifying existing data.

1.1 What Generative AI Can Do:

Generative AI models are capable of producing a wide range of content, including:

- Text: Writing articles, stories, poems, emails, code, scripts, and summaries, and even engaging in human-like conversations (e.g., ChatGPT, Gemini).
- Images: Generating realistic images from text descriptions, modifying existing images, creating unique artwork, and even designing new products (e.g., DALL-E, Midjourney, Stable Diffusion).
- Audio: Composing music, synthesizing natural-sounding speech for voice assistants, or generating sound effects.
- Video: Creating animations from text prompts or applying special effects to existing video.
- Code: Generating original software code, autocompleting code snippets, and translating between programming languages.
- 3D Models and Simulations: Designing new objects and environments, or even simulating complex systems like molecular structures for drug discovery.

1.2 Why is it significant?

Generative AI is a game-changer because it moves AI beyond analysis and prediction into the realm of creation. This opens up a vast array of applications across industries, from automating content creation and boosting creativity to accelerating scientific discovery and improving customer experiences. It's a powerful tool that can augment human capabilities and transform how we interact with technology.

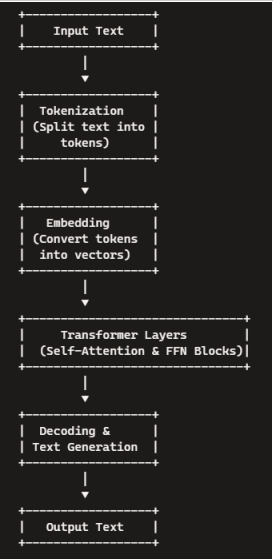

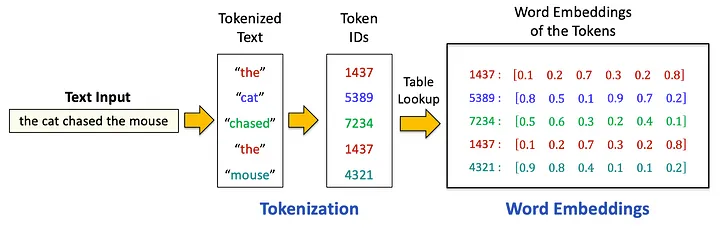

2 What is LLM

2.1 How LLM Work?

1. Input Text: The process starts with raw text data.

2. Tokenization: The text is split into smaller units called tokens, which can be words or subword pieces.

- Why It Matters: LLMs process tokens, not raw text. Tokens can represent words, subwords, or characters, depending on the tokenizer.

3. Embedding: Each token is transformed into a numerical vector that captures its semantic properties.

- Example: Similar words like "king" and "queen" will have embeddings close to each other in vector space.
- Why It Matters: Embeddings give the model a numerical way to represent relationships between words.

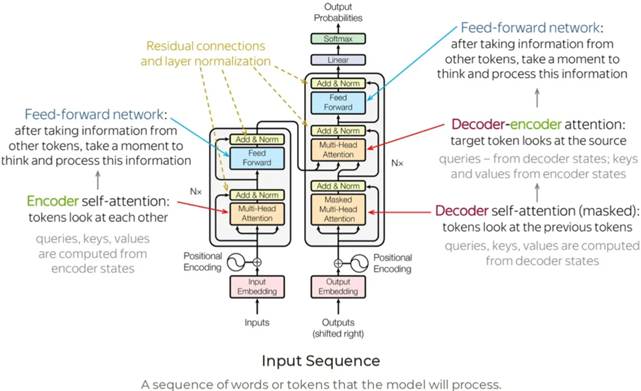
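
To make the tokenization and embedding steps concrete, here is a minimal sketch. It assumes a toy word-level tokenizer and three hand-picked 3-dimensional vectors. Real LLMs use trained subword tokenizers and learned embeddings with hundreds or thousands of dimensions, so the names `tokenize` and `EMBEDDINGS` and all the numbers are illustrative only.

```python
import math
import re

def tokenize(text):
    """Toy tokenizer: lowercase, then split into words and punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

# Hand-picked vectors for illustration only (not taken from a trained model).
EMBEDDINGS = {
    "king":  [0.90, 0.80, 0.10],
    "queen": [0.85, 0.90, 0.15],
    "apple": [0.10, 0.20, 0.95],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means 'pointing the same way'."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

tokens = tokenize("The king greets the queen.")
print(tokens)

# "king" should sit closer to "queen" than to "apple" in this toy space.
print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"]))
print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["apple"]))
```

Cosine similarity is one common way to measure how close two embeddings are. The vectors here were chosen so that the "king"/"queen" pair scores higher than the "king"/"apple" pair.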

4. Transformer Layers: Stacked transformer layers, built around self-attention and feed-forward networks, process these vectors to capture context and relationships between tokens.

- Core Idea: Transformers are the backbone of LLMs. They use mechanisms like self-attention to process input efficiently.

Key Components:

- Encoder and Decoder: The original Transformer pairs an encoder with a decoder. BERT keeps only the encoder, while GPT keeps only the decoder.
- Self-Attention: Helps the model focus on relevant parts of the input.
- Feedforward Layers: Further transform each token's attention output before it is passed to the next layer.
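
The self-attention component can be sketched in a few lines. This is a simplified single-head version in plain Python that assumes identity projections (queries, keys, and values are all the input vectors themselves). Real transformer layers learn separate projection matrices and use many heads. The input vectors are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (a simplification)."""
    d = len(x[0])
    outputs, weights = [], []
    for q in x:
        # Score this token against every token, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of all token vectors.
        outputs.append([sum(w_j * v[i] for w_j, v in zip(w, x)) for i in range(d)])
    return outputs, weights

# Three toy token vectors: the first two are similar, the third is different.
x = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one token attends to every token. The first token puts more weight on the similar second token than on the dissimilar third one.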

5. Decoding & Text Generation: Using the learned patterns, the model generates output text based on various decoding strategies (e.g., greedy decoding, beam search).

6. Output Text: Finally, the generated tokens are converted back into human-readable text.
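
As a rough illustration of the decoding step, the sketch below picks the next token from a set of scores in two ways: greedy decoding (always take the highest score) and temperature-scaled sampling. The vocabulary and logits are hypothetical, and beam search is left out to keep the example short.

```python
import math
import random

def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities; lower temperature sharpens the distribution."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_pick(logits):
    """Greedy decoding: index of the single highest-scoring token."""
    return max(range(len(logits)), key=lambda i: logits[i])

def sample_pick(logits, temperature, rng):
    """Sampling: draw a token index at random, weighted by its probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]

VOCAB = ["Paris", "London", "banana"]   # hypothetical three-token vocabulary
logits = [3.0, 2.0, 0.1]                # hypothetical next-token scores

print("greedy:", VOCAB[greedy_pick(logits)])
print("probs at T=1.0:", softmax_with_temperature(logits, 1.0))
print("probs at T=0.2:", softmax_with_temperature(logits, 0.2))

rng = random.Random(0)
print("sampled:", VOCAB[sample_pick(logits, 1.0, rng)])
```

Greedy decoding is deterministic, while sampling can return a different token on each call. Lowering the temperature concentrates probability on the top token, so sampling behaves more like greedy decoding.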

2.2 Key features of LLM

Context Awareness

- LLMs can take the surrounding sentence or paragraph into account, enabling coherent responses.

Few-Shot and Zero-Shot Learning

- Few-Shot: The model picks up a task from a handful of examples given in the prompt.
- Zero-Shot: The model performs a task from the instruction alone, without any examples.

Scalability

- Larger models (e.g., GPT-4), with billions of parameters or more, generally perform better but require more computational resources.

2.3 Application of LLM

- Conversational Agents: Chatbots, virtual assistants
- Text Generation: Articles, marketing copy, creative writing
- Code Generation & Completion: From snippets to full functions
- Question Answering & Summarization: From documents, customer tickets
- Translation & Paraphrasing: Crossing language barriers, clarifying meaning

2.4 Challenges and Limitation

2.4.1 Hallucination

Confidently generating false or nonsensical facts.

2.4.2 Bias & Fairness

Reflecting or amplifying societal biases in training data.

2.4.3 Compute & Cost

Billions of parameters demand heavy GPUs, energy, and budget.
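
A back-of-the-envelope calculation shows why parameter count drives hardware needs. The sketch below assumes a hypothetical 7-billion-parameter model and counts only the memory needed to hold the weights. Activations, the attention cache, and optimizer state during training add more on top.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

def weight_memory_gb(num_params, dtype):
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

NUM_PARAMS = 7e9  # hypothetical model size, chosen for illustration

for dtype in ("fp32", "fp16", "int8"):
    print(f"{dtype}: {weight_memory_gb(NUM_PARAMS, dtype):.1f} GB")
```

Under these assumptions the weights alone take 28 GB at 32-bit precision, 14 GB at 16-bit, and 7 GB at 8-bit, which is why lower precision is a common way to fit models onto smaller GPUs.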

2.4.4 Data Privacy

Risk of memorizing and regurgitating sensitive data.

2.5 Emerging Trends

- Multimodal LLMs: Merging text, vision, audio (e.g., GPT-4’s image understanding).
- Sparse & Mixture-of-Experts: Activating only parts of the model per input to scale efficiently.
- Continual Learning: Updating models incrementally without forgetting past knowledge.
- On-Device & Edge LLMs: Tiny LLMs optimized for smartphones and embedded devices.
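
The Mixture-of-Experts idea, activating only part of the model per input, can be sketched with a toy top-k router. Everything here is hypothetical: the four "experts" are simple functions rather than neural sub-networks, and the router scores are fixed numbers, whereas a real model computes them with a learned layer for each token.

```python
import math

def top_k_gate(gate_logits, k=2):
    """Keep the k highest-scoring experts and renormalize their weights with softmax."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    m = max(gate_logits[i] for i in top)
    exps = {i: math.exp(gate_logits[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

def moe_forward(x, experts, gate_logits, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    gates = top_k_gate(gate_logits, k)
    return sum(w * experts[i](x) for i, w in gates.items())

# Toy experts standing in for separate feed-forward sub-networks.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: -x, lambda x: x * x]
gate_logits = [0.1, 2.0, -1.0, 1.5]  # hypothetical router scores for one token

print(top_k_gate(gate_logits))
print(moe_forward(3.0, experts, gate_logits))
```

With `k=2`, only experts 1 and 3 run for this input. The other two are skipped entirely, which is where the compute saving comes from.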

3 Prompt Engineering

| Category | Tips | Examples |
| --- | --- | --- |
| Clear Objective | State your goal explicitly | “Summarize this article in 3 bullet points.” |
| Prompt Structure | Use system/user roles; separate context | System: “You are an expert historian.” User: “Describe WWII.” |
| Context Provision | Give relevant background or data | “Here is sales data: [table]. Analyze Q1 vs. Q2 trends.” |
| Formatting Guides | Specify output format | “Return JSON with ‘title’ and ‘summary’ fields.” |
| Temperature | Control creativity (0–1 scale) | “Use temperature=0.2 for factual output.” |
| Max Tokens | Limit length of response | “Limit to 150 tokens.” |
| Few-Shot Prompting | Provide examples for format consistency | “Q: What’s the capital of France? A: Paris. Q: Spain? A:” |
| Chain-of-Thought | Encourage stepwise reasoning | “Think step by step to solve this math problem.” |
| Role Play | Assign persona to influence style | “You’re a marketing guru. Draft an email campaign.” |
| Error Handling | Ask for self-checks or clarifications | “If any data is missing, ask for more info before proceeding.” |
| Follow-Up Prompts | Plan for iterative refinement | “Now expand on point 2 with more technical details.” |
| Common Pitfalls | Avoid ambiguity; watch pronoun references | “Don’t use ‘it’ without clear antecedent.” |
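
Several of the rows above (system/user roles, few-shot examples, temperature, max tokens) can be combined into one request. The sketch below only builds the request data and calls no service. The `role`/`content` message layout and the `temperature` and `max_tokens` field names follow a convention used by many chat-style APIs, but they are assumptions here, so check your provider's documentation for the exact names.

```python
def build_messages(system_role, examples, question):
    """Assemble a chat prompt: system instruction, few-shot Q/A pairs, then the real question."""
    messages = [{"role": "system", "content": system_role}]
    for example_question, example_answer in examples:
        messages.append({"role": "user", "content": example_question})
        messages.append({"role": "assistant", "content": example_answer})
    messages.append({"role": "user", "content": question})
    return messages

# Hypothetical request payload; field names vary between providers.
request = {
    "messages": build_messages(
        system_role="You are a geography tutor. Answer with the city name only.",
        examples=[("What's the capital of France?", "Paris")],
        question="What's the capital of Spain?",
    ),
    "temperature": 0.2,   # low value for factual output
    "max_tokens": 150,    # cap on response length
}

for message in request["messages"]:
    print(f'{message["role"]}: {message["content"]}')
```

Keeping the few-shot examples as separate user/assistant turns, rather than pasting them into one long string, makes it easy to add or remove examples without touching the instruction.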

4 Current most popular LLM

Here’s a side-by-side comparison of today’s leading LLMs based on Artificial Analysis benchmarks:

| Model | Provider | Context Window | Intelligence Index | Price (USD/1M tokens) | Output Speed (tokens/s) | Latency to 1st Chunk (s) |
| --- | --- | --- | --- | --- | --- | --- |
| o3-pro | OpenAI | 200 K | 71 | $35.00 | 23.3 | 106.9 |
| Gemini 2.5 Pro | Google DeepMind | 1 M | 70 | $3.44 | 147.9 | 34.7 |
| o3 | OpenAI | 128 K | 70 | $3.50 | 140.1 | 16.9 |
| o4-mini (high) | OpenAI | 200 K | 70 | $1.93 | 161.6 | 36.4 |
| DeepSeek R1 0528 | DeepSeek AI | 128 K | 68 | $0.96 | 29.4 | 2.4 |

Key insights:

- Highest Intelligence Index: o3-pro (71)
- Biggest context: Gemini 2.5 Pro (1 M tokens)
- Best throughput: o4-mini (161.6 tokens/s)
- Lowest latency: DeepSeek R1 (2.4 s)
- Cheapest at scale: DeepSeek R1 and o4-mini
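
The key insights can be re-derived from the comparison table with a few lines of code, which is handy when the numbers change. The figures below are copied from the table. Reading "K" as 1,000 and "M" as 1,000,000 tokens is an assumption, and the helper names `best` and `cost_usd` are illustrative.

```python
MODELS = [
    {"name": "o3-pro", "context": 200_000, "index": 71, "price": 35.00, "speed": 23.3, "latency": 106.9},
    {"name": "Gemini 2.5 Pro", "context": 1_000_000, "index": 70, "price": 3.44, "speed": 147.9, "latency": 34.7},
    {"name": "o3", "context": 128_000, "index": 70, "price": 3.50, "speed": 140.1, "latency": 16.9},
    {"name": "o4-mini (high)", "context": 200_000, "index": 70, "price": 1.93, "speed": 161.6, "latency": 36.4},
    {"name": "DeepSeek R1 0528", "context": 128_000, "index": 68, "price": 0.96, "speed": 29.4, "latency": 2.4},
]

def best(field, highest=True):
    """Name of the model with the highest (or lowest) value in the given column."""
    pick = max if highest else min
    return pick(MODELS, key=lambda m: m[field])["name"]

def cost_usd(name, tokens):
    """Estimated spend for a token volume at the listed price per 1M tokens."""
    price = next(m["price"] for m in MODELS if m["name"] == name)
    return tokens / 1_000_000 * price

print("Highest Intelligence Index:", best("index"))
print("Biggest context:", best("context"))
print("Best throughput:", best("speed"))
print("Lowest latency:", best("latency", highest=False))
print("Cheapest:", best("price", highest=False))
print("50M tokens on o3-pro: $", round(cost_usd("o3-pro", 50_000_000), 2))
print("50M tokens on DeepSeek R1 0528: $", round(cost_usd("DeepSeek R1 0528", 50_000_000), 2))
```

The cost lines show how quickly the price column matters at volume: the same 50 million tokens differ by well over an order of magnitude between the most and least expensive rows.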

Beyond these headline metrics, you might weigh:

- Agentic tool-use (e.g. web browsing, code exec)
- Multimodal handling (vision + language)
- Fine-tuning & embedding support

5 Can LLMs think like a human brain?

LLMs do not actually think like a human brain.

✅ How LLMs work:

- LLMs are trained to predict the most likely next token (roughly, the next word or word piece) in a sequence of text, based on patterns in huge amounts of data.
- They use mathematical transformations (like attention mechanisms in transformers) to create representations of text.
- They don’t have consciousness, emotions, or real understanding of the world.

✅ How human brains work:

- Your brain combines memory, perception, emotion, intuition, and reasoning.
- You have self-awareness and an internal subjective experience (what philosophers call qualia).
- You can form intentions, set goals, and interpret meaning beyond patterns.

✅ What LLMs can do that resembles thinking:

- They can generate answers, summarize, reason through logical steps (to an extent), and simulate conversation.
- This mimics some aspects of language-based reasoning.
- But it’s fundamentally pattern recognition, not true comprehension.

In short:

LLMs simulate aspects of human-like language output, but they don’t think or understand the way your brain does. They are sophisticated statistical tools, not minds.

6 How to apply LLM to business?

6.1 Core Business Use Cases

1. Customer Support

* Chatbots that handle FAQs, complaints, and returns.
* Auto-generate personalized email responses.
* Tools: GPT-based assistants, Zendesk integrations.

2. Marketing & Sales

* Write ads, product descriptions, landing pages.
* Generate email campaigns tailored to segments.
* Analyze customer sentiment from social media.

3. Operations & Productivity

* Automate document generation (contracts, reports, invoices).
* Summarize meetings, extract action items (e.g. Zoom + AI tools).
* Translate or localize content instantly.

4. Data & Analytics

* Natural language interfaces to databases (“Ask your data” tools).
* Generate insights from reports or dashboards.
* Simplify analytics for non-technical users.

5. Product Development

* Brainstorm feature ideas or user stories.
* Auto-generate code snippets or documentation.
* AI-powered prototyping or UX writing.

6.2 By Industry

- E-commerce: Personalized recommendations, automated product tagging, dynamic customer service.
- Finance: AI assistants for client communication, report generation, fraud pattern explanation.
- Healthcare: Auto-generate patient summaries, triage chatbot, simplify medical notes.
- Legal: Draft contracts, summarize legal documents, compare regulations.
- Real Estate: Auto-write listings, chatbot for property Q&A, summarize offers.

6.3 How to start?

1. Pick one workflow that’s time-consuming and text-heavy.
2. Use tools like ChatGPT, Claude, or open-source LLMs (e.g., Llama 3, Mistral).
3. Integrate via APIs, no-code platforms (Zapier, Make), or developer frameworks such as LangChain.
4. Test, fine-tune, and monitor for accuracy.
5. Scale to other departments.

7 Best Practices & Mitigation

1. Prompt Engineering & Input Control

- Frame questions clearly and consistently (e.g., label roles: “User:” / “Assistant:”).
- Use few-shot or chain-of-thought exemplars to guide style and reasoning.
- Validate and sanitize user inputs to prevent injection or adversarial prompts.
- Lock down system prompts and enforce token-level policies on forbidden content.

2. Retrieval-Augmented Generation (RAG)

- Ground answers in trusted knowledge bases (databases, vector stores).
- Retrieve first, then generate: fetch the top-k relevant documents, then let the LLM answer from them.
- Track source citations in outputs to boost transparency.
- Periodically refresh your index to avoid stale or outdated info.
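
The retrieve-then-generate flow can be sketched without any external services. This example assumes a tiny in-memory knowledge base and uses word-overlap cosine similarity as a stand-in for a real embedding-based vector search. The document ids, texts, and function names are all hypothetical, and the final prompt would be sent to whichever LLM you use.

```python
import math
import re
from collections import Counter

DOCS = {  # hypothetical knowledge base: id -> text
    "returns-policy": "Items can be returned within 30 days of delivery with a receipt.",
    "shipping-info": "Standard shipping takes 3 to 5 business days within the country.",
    "warranty": "All electronics carry a one year limited warranty.",
}

def bag_of_words(text):
    """Word counts, used here in place of a learned embedding."""
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, k=2):
    """Return the ids of the k documents most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(DOCS, key=lambda doc_id: cosine(q, bag_of_words(DOCS[doc_id])), reverse=True)
    return ranked[:k]

def build_grounded_prompt(query, k=2):
    """Put the retrieved sources, tagged with their ids, in front of the question."""
    context = "\n".join(f"[{doc_id}] {DOCS[doc_id]}" for doc_id in retrieve(query, k))
    return (
        "Answer using only the sources below and cite their ids in brackets.\n"
        f"{context}\n\nQuestion: {query}"
    )

print(build_grounded_prompt("Can items be returned within 30 days?"))
```

Tagging each source with its id inside the prompt is what makes citation tracking possible later: the model can be asked to echo the ids it relied on.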

3. Fine-Tuning & Parameter-Efficient Tuning

- Fully fine-tune only when you have abundant, high-quality labeled data.
- Leverage adapters, LoRA, or prefix tuning for domain-specific tweaks with minimal compute.
- Split data into train/validation/test to catch overfitting and data leakage.
- Continuously evaluate on edge cases and rare scenarios.
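
A quick calculation shows why LoRA needs so little compute. LoRA freezes a weight matrix of shape d_out × d_in and trains two small matrices of shapes d_out × r and r × d_in instead. The layer size and rank below are hypothetical values chosen for illustration.

```python
def full_finetune_params(d_out, d_in):
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in

def lora_params(d_out, d_in, rank):
    """Trainable parameters for LoRA: B is (d_out x rank), A is (rank x d_in)."""
    return rank * (d_out + d_in)

D_OUT, D_IN, RANK = 4096, 4096, 8  # hypothetical layer size and LoRA rank

full = full_finetune_params(D_OUT, D_IN)
lora = lora_params(D_OUT, D_IN, RANK)
print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {RANK}): {lora:,} trainable parameters")
print(f"LoRA trains {100 * lora / full:.2f}% of the layer")
```

For this one layer, LoRA trains 65,536 parameters instead of 16,777,216, which is under half a percent of the full matrix.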

4. Reinforcement Learning from Human Feedback (RLHF)

- Collect diverse human rankings on model outputs to train a reward model.
- Use robust RL algorithms (e.g., PPO), whose clipped updates keep each policy step small and training stable.
- Rotate human annotators and audit reward signals for bias drift.
- Regularly re-calibrate with fresh feedback as your domain evolves.
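
The "clipped updates" in PPO refer to its clipped surrogate objective. The sketch below computes that objective for a single action, assuming the commonly used clip range of 0.2. A full RLHF setup adds a reward model, advantage estimation, and usually a penalty for drifting from the original model, none of which are shown here.

```python
def clipped_surrogate(ratio, advantage, epsilon=0.2):
    """PPO clipped objective for one action.

    ratio     = new_policy_prob / old_policy_prob for the action taken
    advantage = how much better the action was than expected
    """
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    # Taking the minimum removes any incentive to push the ratio outside the clip range.
    return min(ratio * advantage, clipped_ratio * advantage)

# Good action, policy already moved a lot toward it: the gain is capped.
print(clipped_surrogate(ratio=1.5, advantage=1.0))
# Good action, small policy change: no clipping applies.
print(clipped_surrogate(ratio=1.1, advantage=2.0))
# Bad action, policy already moved away from it: the objective is capped here too.
print(clipped_surrogate(ratio=0.5, advantage=-1.0))
```

In the first case the objective stops growing once the ratio passes 1.2, so the optimizer gains nothing from moving the policy further in a single update.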

5. Bias & Fairness Mitigations

- Assess dataset demographics and perform subgroup performance analysis.
- Apply data augmentation or re-sampling to balance underrepresented groups.
- Use bias-detection tools (e.g., the What-If Tool) and adversarial bias tests.
- Debias at both ends: counterfactual data augmentation during training and output filters after generation.
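
Subgroup performance analysis can start as simply as computing accuracy per group and looking at the gap. The records below are made-up (group, prediction, label) triples. In practice they would come from your evaluation set, and accuracy may not be the only metric worth comparing.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, prediction, label) triples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, prediction, label in records:
        total[group] += 1
        correct[group] += int(prediction == label)
    return {group: correct[group] / total[group] for group in total}

# Hypothetical evaluation results for two demographic groups.
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 1),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 0), ("B", 0, 1),
]

scores = accuracy_by_group(records)
gap = max(scores.values()) - min(scores.values())
print(scores)
print("largest accuracy gap between groups:", gap)
```

A large gap like the one in this toy data is a signal to look at how each group is represented in the training data before reaching for re-sampling or augmentation.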

6. Hallucination Detection & Grounding

- Integrate secondary verification: query external APIs or fact-checkers.
- Set up confidence thresholds; route low-confidence queries to safe fallbacks.
- Train a hallucination classifier on synthetic “made-up” vs. “grounded” pairs.
- Log hallucination incidents and retrain/fine-tune to plug knowledge gaps.
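
Confidence-threshold routing might look like the sketch below. It assumes your model or API returns a log-probability for each generated token and uses their average as a rough confidence score. That proxy is imperfect (a model can be confidently wrong), and the 0.7 threshold and fallback message are arbitrary illustrative choices.

```python
import math

FALLBACK = "I'm not sure about this one, so I'm passing it to a human reviewer."

def mean_token_probability(token_logprobs):
    """Geometric-mean probability of the generated tokens (natural-log inputs)."""
    return math.exp(sum(token_logprobs) / len(token_logprobs))

def route(answer, token_logprobs, threshold=0.7):
    """Return the answer if confidence clears the threshold, otherwise a safe fallback."""
    if mean_token_probability(token_logprobs) >= threshold:
        return answer
    return FALLBACK

# Hypothetical log-probabilities for two generated answers.
print(route("Paris", [-0.05, -0.10]))            # high confidence
print(route("The treaty of 1742", [-1.2, -0.9]))  # low confidence
```

Logging every fallback together with the original query gives you the incident data needed to spot recurring knowledge gaps.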

7. Output Moderation & Safety Filters

- Implement multi-stage filters: regex-based checks + ML classifiers for toxicity, hate, PII.
- Throttle or quarantine outputs flagged as high-risk before user delivery.
- Maintain allow-lists and block-lists for sensitive topics (medical, legal, financial).
- Continuously expand your filter taxonomy as new threat vectors emerge.

8. Data Privacy & PII Handling

- Enforce input redaction: strip or hash personal identifiers before inference.
- Avoid logging raw user content; store only anonymized embeddings or metadata.
- Use on-device or private-cloud inference for regulated or sensitive domains.
- Regularly audit data retention and compliance with GDPR, CCPA, HIPAA, etc.
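
Input redaction can be prototyped with regular expressions and a hash. The sketch below replaces email addresses and phone-like numbers with short hashed placeholders before the text would reach a model. The two patterns are deliberately simple assumptions and will miss many real-world formats, so treat this as a starting point rather than a complete PII detector.

```python
import hashlib
import re

# Simplified patterns for illustration; real PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def pseudonym(match, kind):
    """Replace a match with a tag plus a short hash, so repeats map to the same token."""
    digest = hashlib.sha256(match.group(0).encode("utf-8")).hexdigest()[:8]
    return f"<{kind}:{digest}>"

def redact(text):
    """Strip emails and phone-like numbers before the text is sent for inference."""
    text = EMAIL_RE.sub(lambda m: pseudonym(m, "EMAIL"), text)
    text = PHONE_RE.sub(lambda m: pseudonym(m, "PHONE"), text)
    return text

print(redact("Contact jane.doe@example.com or +1 415-555-0100 about order 42."))
```

Because the hash is deterministic, the same email always maps to the same placeholder, which keeps conversations coherent. A plain hash of a guessable value can be reversed by brute force, so a keyed hash or a lookup table is a safer choice in production.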

9. Security & Access Control

- Authenticate and authorize all model calls via API keys or OAuth scopes.
- Isolate high-privilege models in segmented VPCs with strict ingress/egress rules.
- Monitor for abnormal usage patterns (spikes, unusual queries) and auto-throttle.
- Pen-test your prompt-to-API pipeline for injection attacks and privilege escalation.

10. Monitoring, Logging & Metrics

- Track quality metrics: perplexity, BLEU/CIDEr for generation tasks.
- Instrument production with latency, error rates, token consumption dashboards.
- Set up alerts on drift: sudden drops in accuracy or spikes in hallucinations.
- Use A/B testing to compare model versions under real-world load.
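
Of the quality metrics listed, perplexity is the easiest to compute yourself: it is the exponential of the average negative log-likelihood per token. The sketch below assumes you already have natural-log probabilities for each token of a held-out text, and the sample values are made up.

```python
import math

def perplexity(token_logprobs):
    """exp of the average negative log-probability per token (natural log)."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))

# A model that gives every token probability 0.25 is as uncertain as a
# uniform choice among 4 options.
uniform_quarter = [math.log(0.25)] * 4
print(perplexity(uniform_quarter))

# Higher token probabilities give lower (better) perplexity.
confident = [math.log(0.9), math.log(0.8), math.log(0.95)]
print(perplexity(confident))
```

Tracking this number on a fixed evaluation set over time is one simple way to notice drift, since a sudden rise suggests the model is seeing text it predicts poorly.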

11. Model Efficiency & Deployment

- Apply model distillation or quantization to shrink footprint and reduce cost.
- Leverage sparse or MoE architectures to activate only needed parameters.
- Cache frequent prompts and outputs to save compute cycles.
- Use auto-scaling inference clusters to match user demand.
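
Prompt caching can be sketched as a small wrapper around whatever function calls your model. The example below normalizes case and whitespace before hashing the prompt, so trivially different phrasings share one cache entry. `fake_model` is a hypothetical stand-in for a real inference call, and this kind of caching only makes sense for prompts whose answers do not depend on the user or on sampling randomness.

```python
import hashlib

class PromptCache:
    """In-memory cache keyed by a hash of the normalized prompt."""

    def __init__(self, generate):
        self._generate = generate
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt):
        # Collapse whitespace and case so near-identical prompts share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, prompt):
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
        else:
            self.misses += 1
            self._store[key] = self._generate(prompt)
        return self._store[key]

def fake_model(prompt):
    """Hypothetical stand-in for an expensive model call."""
    return f"answer to: {prompt.strip()}"

cache = PromptCache(fake_model)
first = cache.get("What is RAG?")
second = cache.get("  what is   rag? ")  # same prompt after normalization
print(first, "| hits:", cache.hits, "| misses:", cache.misses)
```

The second call never reaches the model, which is where the compute saving comes from. A production cache would also need an eviction policy and an expiry time so that stale answers do not live forever.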

12. Explainability & Transparency

- Provide users with traceable “rationale” sections or citation links.
- Implement attention- or gradient-based saliency maps for internal audits.
- Offer users logs of what data the model accessed (in RAG setups).
- Publish model cards detailing training data, architecture, known limitations.

8 Reference

https://medium.com/@lmpo/tokenization-and-word-embeddings-the-building-blocks-of-advanced-nlp-c203b78bfd07

https://medium.com/@asadali.syne/understanding-the-transformer-architecture-in-llm-e475453879fe