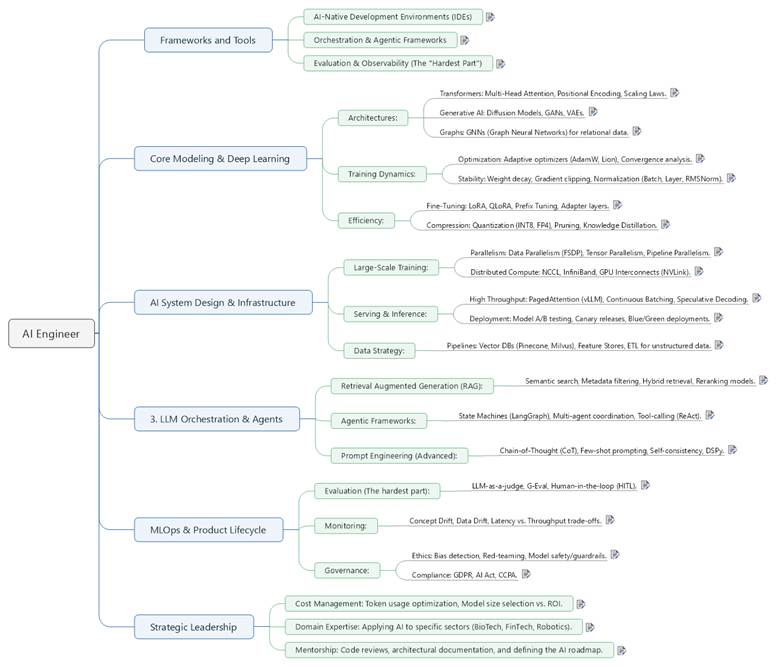

AI Engineer

1 Frameworks and Tools

1.1 AI-Native Development Environments (IDEs)

1.2 Orchestration & Agentic Frameworks

1.3 Evaluation & Observability (The "Hardest Part")

2 Core Modeling & Deep Learning

2.1 Architectures:

2.1.1 Transformers: Multi-Head Attention, Positional Encoding, Scaling Laws

2.1.2 Generative AI: Diffusion Models, GANs, VAEs

2.1.3 Graphs: GNNs (Graph Neural Networks) for relational data

2.2 Training Dynamics:

2.2.1 Optimization: Adaptive optimizers (AdamW, Lion), Convergence analysis

2.2.2 Stability: Weight decay, Gradient clipping, Normalization (Batch, Layer, RMSNorm)

2.3 Efficiency:

2.3.1 Fine-Tuning: LoRA, QLoRA, Prefix Tuning, Adapter layers

2.3.2 Compression: Quantization (INT8, FP4), Pruning, Knowledge Distillation

3 AI System Design & Infrastructure

3.1 Large-Scale Training:

3.1.1 Parallelism: Data Parallelism (FSDP), Tensor Parallelism, Pipeline Parallelism

3.1.2 Distributed Compute: NCCL, InfiniBand, GPU Interconnects (NVLink)

3.2 Serving & Inference:

3.2.1 High Throughput: PagedAttention (vLLM), Continuous Batching, Speculative Decoding

3.2.2 Deployment: Model A/B testing, Canary releases, Blue/Green deployments

3.3 Data Strategy:

3.3.1 Pipelines: Vector DBs (Pinecone, Milvus), Feature Stores, ETL for unstructured data

4 LLM Orchestration & Agents

4.1 Retrieval Augmented Generation (RAG):

4.1.1 Semantic search, Metadata filtering, Hybrid retrieval, Reranking models

4.2 Agentic Frameworks:

4.2.1 State Machines (LangGraph), Multi-agent coordination, Tool-calling (ReAct)

4.3 Prompt Engineering (Advanced):

4.3.1 Chain-of-Thought (CoT), Few-shot prompting, Self-consistency, DSPy

5 MLOps & Product Lifecycle

5.1 Evaluation (The hardest part):

5.1.1 LLM-as-a-judge, G-Eval, Human-in-the-loop (HITL)

5.2 Monitoring:

5.2.1 Concept Drift, Data Drift, Latency vs. Throughput trade-offs

5.3 Governance:

5.3.1 Ethics: Bias detection, Red-teaming, Model safety/guardrails

5.3.2 Compliance: GDPR, AI Act, CCPA

6 Strategic Leadership

6.1 Cost Management: Token usage optimization, Model size selection vs. ROI

6.2 Domain Expertise: Applying AI to specific sectors (BioTech, FinTech, Robotics)

6.3 Mentorship: Code reviews, architectural documentation, and defining the AI roadmap

1.1 AI-Native Development Environments (IDEs)

By 2026, the AI-Native IDE market has split into three distinct philosophies: Forks (VS Code-based but rebuilt), High-Performance Native (built from scratch for speed), and Deep Integrations (standard editors with agentic layers).

In-Depth Comparison Table (2026)

| Feature | Cursor | Windsurf | Zed | GitHub Copilot |
| --- | --- | --- | --- | --- |
| Foundation | VS Code Fork | VS Code Fork | Rust-Native (Scratch) | VS Code Extension / VS 2026 |
| Core AI Logic | Agent Mode: Multi-cycle autonomous editing. | Cascade: Flow-aware proactive planning. | Inline/Multiplayer: Minimalist, high-speed AI. | Copilot Chat: Deeply integrated assistant. |
| Context Handling | Full-repo vector indexing. | Continuous "Flow" memory. | Project-specific context. | Workspace-wide awareness. |
| Model Choice | Multi-model (Claude, GPT, Gemini). | Multi-model support. | User-owned/Custom models. | 15+ models (largest selection). |
| Performance | Moderate (Electron-based). | Moderate (Electron-based). | Ultra-Fast (GPU-accelerated). | Moderate (VS Code standard). |
| Pricing | Free tier; Pro $20/mo. | Generous free tier; Pro option. | Free & Open Source. | Free (OSS/Students); $10–$39/mo. |

1. Cursor: The "Agentic" Leader

Cursor is currently the most popular choice for developers seeking an "AI-first" experience without leaving the VS Code ecosystem.

· Composer Mode: Allows the AI to create and refactor multiple files simultaneously from a single natural language prompt.

· Agent Mode (Ctrl+I): Uses a sophisticated multi-phase architecture to analyze the repo, plan, and execute tasks autonomously until completion.

· Best For: Senior and Full-stack engineers at startups who need high-speed, project-wide refactoring and complex task execution.

2. Windsurf: The "Context-First" Challenger

Created by Codeium, Windsurf competes directly with Cursor but emphasizes a different type of context retention.

· The Cascade: A unique agent that "just remembers" your intent across a session, understanding context across the terminal, editor, and browser.

· Flow Awareness: It tracks incomplete work states and narrates exactly what it is doing, which is helpful for larger, more complex codebases.

· Best For: Principal or Staff engineers working in massive, long-lived repositories where "not losing the thread" is critical.

3. Zed: The "High-Performance" Speedster

Zed is the choice for developers who prioritize raw editor performance and real-time collaboration over heavy AI automation.

· Rust-Native Performance: By using GPU-accelerated rendering, it eliminates the "sluggishness" often found in Electron-based editors like Cursor or VS Code.

· Multiplayer Coding: Allows teams to work together in real-time on the same file, similar to Google Docs, but with built-in AI assistance.

· Best For: Performance-focused developers who want a minimalist environment and frequently pair-program remotely.

4. GitHub Copilot & Visual Studio 2026

For many enterprises, staying with the standard tools is the safest and most compliant path.

· Deep Integration: In Visual Studio 2026, Copilot is "woven into" core experiences, providing profiling insights and debugging workflows directly.

· Governance: It offers enterprise-ready compliance and a predictable monthly cost, making it the default choice for large organizations.

· Best For: Team leads and ICs in GitHub-standardized organizations who prefer a trusted, stable default with extensive governance.

1.2 Orchestration & Agentic Frameworks

In 2026, the landscape of Orchestration and Agentic Frameworks has shifted from simple "prompt chaining" to complex Cognitive Architectures. Developers now choose frameworks based on whether they need role-based teamwork, deterministic graph control, or automated prompt optimization.

Detailed Comparison of Top Frameworks (2026)

| Feature | LangGraph | CrewAI | AutoGen | DSPy |
| --- | --- | --- | --- | --- |
| Core Paradigm | Graph-driven State Machine. | Role-based Teams ("Crews"). | Conversational Multi-agent. | Programmatic Optimization. |
| Control Logic | Explicit: Direct control over nodes and edges. | Implicit: High-level goal and role definitions. | Dynamic: Agents "talk" to solve problems. | Declarative: Contracts define inputs/outputs. |
| Best For | Enterprise pipelines with strict branching. | Rapid prototyping of human-like workflows. | Exploratory tasks and coding assistants. | High-accuracy reasoning with auto-tuned prompts. |
| Learning Curve | Moderate–High (Requires graph theory). | Low (Very intuitive). | Moderate (Tricky versioning). | Moderate (Focuses on Python code). |
| Speed/Efficiency | Fastest: Efficient state handling. | Slower: Overhead from "autonomous deliberation". | Moderate: High token usage for chat history. | Ultra-Fast: Minimal framework overhead. |

1. LangGraph: The "Stateful" Architect

LangGraph is the preferred choice for production-grade systems requiring high reliability.

· Deterministic Flows: It treats workflows as nodes (actions) and edges (transitions), making it easy to build loops, retries, and conditional logic.

· State Management: It uses a centralized "AgentState" object that persists across steps, ensuring the agent never "forgets" where it is in a complex task.

· Observability: Deeply integrated with LangSmith for real-time tracing and debugging of every transition in the graph.
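The node/edge/state idea described above can be sketched in plain Python. This is a minimal illustration of a graph-driven state machine with a retry loop, not LangGraph's actual API: the node names, the `run` helper, and the "approve on the third attempt" rule are all hypothetical.

```python
from typing import Callable, Dict

State = Dict[str, object]

def draft(state: State) -> State:
    # Node: produce (or re-produce) a draft and count attempts in the shared state.
    attempts = int(state.get("attempts", 0)) + 1
    return {**state, "attempts": attempts, "draft": f"draft v{attempts}"}

def review(state: State) -> State:
    # Node: hypothetical check that only accepts the third attempt.
    return {**state, "approved": state["attempts"] >= 3}

def route_after_review(state: State) -> str:
    # Conditional edge: loop back to "draft" until the review passes.
    return "END" if state["approved"] else "draft"

NODES: Dict[str, Callable[[State], State]] = {"draft": draft, "review": review}
EDGES: Dict[str, Callable[[State], str]] = {
    "draft": lambda s: "review",
    "review": route_after_review,
}

def run(start: str, state: State, max_steps: int = 20) -> State:
    # Walk the graph, threading one state object through every step.
    node = start
    for _ in range(max_steps):
        if node == "END":
            return state
        state = NODES[node](state)
        node = EDGES[node](state)
    raise RuntimeError("graph did not terminate")

final_state = run("draft", {})
print(final_state)
```

Because every transition is an explicit function of the state, loops and retries are bounded (`max_steps`) and each step can be logged or replayed, which is the property the section attributes to graph-based orchestration.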

2. CrewAI: The "Role-Based" Manager

CrewAI mimics a human organizational structure, assigning agents specific "roles," "goals," and "backstories".

· Simple Orchestration: Instead of mapping out every step, you define what each agent is responsible for and let the framework handle delegation.

· Process Modes: Supports Sequential (Step A → Step B) or Hierarchical (Manager Agent oversees others) execution.

· Human-in-the-Loop: Built-in support for checkpoints where a human must approve an agent's work before it proceeds.

3. AutoGen: The "Conversational" Researcher

Developed by Microsoft, AutoGen frames agent interaction as a continuous dialogue.

· Conversational Logic: Agents solve problems by passing messages back and forth, making it excellent for brainstorming or peer-review tasks.

· Code-Native: Historically strong in autonomous coding, where one agent writes code and another executes/debugs it in a sandbox.

· Flexibility: Highly customizable at the tool level, allowing agents to act as ChatGPT-style assistants or specialized tool executors.

4. DSPy: The "Compiler" for Prompts

DSPy is not a traditional agent framework but a "programming model" that aims to replace manual prompt engineering.

· Programmatic Pipelines: You define a "Signature" (e.g., question -> answer) and a "Module" (e.g., ChainOfThought), and DSPy handles the rest.

· Automatic Optimizers: It can "compile" your pipeline, automatically searching for the best instructions and few-shot examples to maximize accuracy.

· Model Portability: If you switch from GPT-4 to Llama 4, you don't rewrite prompts; you simply re-run the optimizer to find the best prompt for the new model.

Strategic Recommendation for 2026

· For Complex Enterprise Workflows: Use LangGraph. Its 2.2x speed advantage over autonomous frameworks and strict state control are vital for scale.

· For Rapid Business Prototyping: Use CrewAI. It is the easiest to explain to non-technical stakeholders because it mirrors human departments.

· For Maximum Accuracy (RAG): Integrate DSPy into your pipeline. It has been shown to improve answer quality by up to 25% through systematic optimization.

1.3 Evaluation & Observability (The "Hardest Part")

In the AI lifecycle, Evaluation and Observability are often called "The Hardest Part" because, unlike traditional software, AI outputs are probabilistic and non-deterministic. You cannot simply check if output == "expected_string".

Instead, you must quantify subjective quality ("vibe") with metrics, trace complex multi-step reasoning, and detect "silent failures" like hallucinations or drift.
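As a small illustration of why exact string matching breaks down, the sketch below scores an answer with token-level F1 instead. It is only one simple lexical metric chosen for illustration (the function name `token_f1` is hypothetical); production evaluation usually layers semantic or LLM-judged metrics on top.

```python
from collections import Counter

def token_f1(prediction: str, reference: str) -> float:
    # Compare bags of lowercase tokens rather than the raw strings.
    pred = prediction.lower().split()
    ref = reference.lower().split()
    if not pred or not ref:
        return float(pred == ref)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)

prediction = "Paris is the capital of France"
reference = "The capital of France is Paris"

exact_match = prediction == reference       # False: the wording differs
score = token_f1(prediction, reference)     # 1.0: the same tokens are present
print(exact_match, score)
```

A lexical score like this ignores word order and meaning, so it should be read as a cheap first signal rather than a verdict on correctness.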

Detailed Comparison: AI Evaluation & Observability (2026)

| Feature | Arize AI (Ax/Phoenix) | Maxim AI | DeepEval | LangSmith |
| --- | --- | --- | --- | --- |
| Core Philosophy | Production Reliability & ML Health. | Full-Stack Quality & Agent Simulation. | "Pytest for LLMs" (Code-Driven). | Debugging & Trace Visualization. |
| Best For... | Large-scale enterprise monitoring. | Multi-agent systems & PM/Eng collab. | CI/CD automation & unit testing. | LangChain/LangGraph users. |
| Simulation | Basic playback. | Advanced: User persona & scenario simulation. | Synthetic dataset generation. | Dataset-driven backtesting. |
| Tracing Depth | High: Built on OpenTelemetry (OTEL). | Highest: Node-level agent trajectory tracing. | Local/Prod tracing via @observe. | Visual: Best-in-class call graph explorer. |
| Primary Metrics | Drift, Bias, & Accuracy. | Task completion & Reasoning quality. | 50+ Research-backed (G-Eval, etc.). | Semantic similarity & Safety. |
| Hosting | Cloud + Managed Private Cloud. | Cloud + Self-hosted. | Open Source + Confident AI (Cloud). | Managed (SaaS) only. |

1. Arize AI (Phoenix / Ax): The Production Guardian

Arize is designed for teams that prioritize long-term stability and enterprise-grade governance.

· Drift & Bias Detection: Unlike pure LLM tools, Arize excels at spotting when a model's performance decays over months or begins showing demographic bias.

· Arize Phoenix (OSS): A local-first version that provides instant OTEL-compliant tracing, which can be seamlessly "upgraded" to the enterprise platform.

· The Logic: It treats AI as a living system that needs a "heart rate monitor" in production.

2. Maxim AI: The Agent "Flight Simulator"

Maxim has carved out a niche as the most comprehensive platform for multi-agent reliability.

· Simulation Engine: Before you deploy, Maxim lets you run your agent against hundreds of simulated user personas (e.g., "The Angry Customer" or "The Expert Researcher") to find edge-case failures.

· Node-Level Debugging: If a 10-step agent loop fails at Step 7, Maxim pinpoints exactly which tool call or reasoning step caused the cascade.

· The Logic: It bridges the gap between engineering (who build) and product (who evaluate) in a single platform.

3. DeepEval: The Developer's Unit Tester

DeepEval is for engineers who want their AI tests to look exactly like their standard Python tests.

· "Pytest for LLMs": It allows you to write threshold-style assertions directly in your CI/CD pipeline (conceptually, something like assert_hallucination_score(output, context) < 0.2; this is illustrative pseudocode rather than the library's exact API).

· Synthetic Data Generation: If you don't have a test set, DeepEval can "evolve" a handful of documents into thousands of challenging test cases automatically.

· The Logic: It assumes that the best place to fix AI quality is in the pull request phase, not just in production.

4. LangSmith: The "Black Box" Opener

LangSmith remains the gold standard for debugging complex chains, especially those built with LangChain or LangGraph.

· Trace Exploration: Its UI is unmatched for seeing exactly what was sent to the model, how many tokens were used, and what the raw JSON response was at every single layer.

· Prompt Playground: You can take a failed trace, pull the prompt into a playground, edit it, and "re-run" it instantly to see if your fix works.

· The Logic: It is the ultimate "investigative tool" for when you know something is wrong but don't know where it's happening.

Summary Table: Which one should you pick?

| If your priority is... | Use this tool |
| --- | --- |
| Enterprise Governance & Drift Monitoring | Arize AI |
| Complex Agents & User Simulation | Maxim AI |
| Fast CI/CD & Local Unit Testing | DeepEval |
| Visual Debugging & LangChain Ecosystem | LangSmith |

2 Core Modeling & Deep Learning

2.1.1 Transformers: Multi-Head Attention, Positional Encoding, Scaling Laws.

1. Multi-Head Attention (MHA)

While standard self-attention allows a model to relate tokens, Multi-Head Attention allows the model to "look" at the input through multiple lenses simultaneously.

· The Mechanism: Instead of performing a single attention function with $d_{model}$-dimensional signals, we project the Queries ($Q$), Keys ($K$), and Values ($V$) $h$ times with different, learned linear projections.

· Parallel Subspaces: Each "head" explores a different representation subspace. One head might focus on grammatical structure (e.g., matching a verb to its subject), while another focuses on semantic relationships or coreference resolution (e.g., linking "he" to "John").

· The Math:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^O$$

where each $\mathrm{head}_i = \mathrm{Attention}(Q W_i^Q, K W_i^K, V W_i^V)$.

· Senior Insight: The computational cost of MHA is $O(n^2 \cdot d)$, where $n$ is sequence length. As a senior, you should be familiar with optimizations like Multi-Query Attention (MQA) (one $K$ and $V$ for all heads) or Grouped-Query Attention (GQA) (used in Llama 3) to reduce the memory bottleneck of the KV-cache during inference.
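To make the KV-cache point concrete, here is a back-of-the-envelope sketch comparing MHA, GQA, and MQA. The model shape (32 layers, 32 query heads, head dimension 128, 8192-token context) and the 2-byte (fp16/bf16) cache entries are assumptions picked for illustration, not the configuration of any specific model.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch_size=1, bytes_per_value=2):
    # Factor 2: every layer stores one tensor of keys and one tensor of values.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_value

# Hypothetical model shape, chosen only for illustration.
shape = dict(n_layers=32, head_dim=128, seq_len=8192)

mha = kv_cache_bytes(n_kv_heads=32, **shape)  # one K/V head per query head
gqa = kv_cache_bytes(n_kv_heads=8, **shape)   # 4 query heads share each K/V head
mqa = kv_cache_bytes(n_kv_heads=1, **shape)   # all query heads share a single K/V head

for name, size in [("MHA", mha), ("GQA", gqa), ("MQA", mqa)]:
    print(f"{name}: {size / 2**30:.3f} GiB per sequence")
```

The cache scales linearly with the number of K/V heads, so under these assumptions GQA with 8 groups cuts the per-sequence cache by 4x and MQA by 32x relative to full MHA.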

2. Positional Encoding (PE)

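Because Transformers process all tokens in parallel (unlike RNNs), self-attention has no built-in notion of order: without positional information, permuting the input tokens simply permutes the outputs (permutation equivariance). The model therefore treats the sentence as a "bag of words" unless we explicitly tell it where each word is.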

· Sinusoidal Encodings (Absolute): The original approach uses sine and cosine functions of different frequencies.

· The Benefit: It allows the model to easily learn to attend by relative positions, because for any fixed offset $k$, $PE_{pos+k}$ can be represented as a linear function of $PE_{pos}$.

· Rotary Positional Embeddings (RoPE): Many modern state-of-the-art models (such as the Llama family and PaLM) use RoPE.

· How it works: Instead of adding a vector to the embedding, RoPE rotates each pair of dimensions of the $Q$ and $K$ vectors in its own 2D plane. The amount of rotation depends on the token's position, so the dot product between a query at position $m$ and a key at position $n$ depends only on the offset $m - n$: relative distance is preserved through the angle between vectors.

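· Senior Insight: Absolute PE (Sinusoidal) struggles to generalize beyond the maximum sequence length seen during training. RoPE and ALiBi (Attention with Linear Biases) are preferred for long-context ("Infinite Context") tasks because they encode relative distance and handle unseen distances more gracefully, although RoPE-based models usually still need context-extension techniques such as position interpolation to run far beyond their training length.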
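The relative-position property of RoPE can be checked numerically. The sketch below is a minimal pure-Python version that rotates adjacent dimension pairs with base 10000; the pairing convention and the example vectors are assumptions (some implementations pair dimension $i$ with $i + d/2$ instead).

```python
import math

def rope(vec, pos, base=10000.0):
    # Rotate each adjacent pair (vec[i], vec[i+1]) by the angle pos * base^(-i/d).
    d = len(vec)
    out = []
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])
    return out

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

q = [0.3, -1.2, 0.7, 0.5]
k = [1.0, 0.4, -0.6, 0.9]

near = dot(rope(q, 3), rope(k, 1))       # query at 3, key at 1: offset 2
far = dot(rope(q, 103), rope(k, 101))    # same offset, both shifted by 100
print(near, far)
```

The two attention scores agree up to floating-point error, which shows that the score depends on the offset between positions rather than on the absolute positions themselves.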

3. Scaling Laws

Scaling laws provide the "science" of AI development. They predict how validation loss will decrease as you increase compute, parameters, or data.

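· Kaplan vs. Chinchilla (Hoffmann et al.):

· Kaplan (2020): Suggested that model size ($N$) is the most important factor: bigger is almost always better.

· Chinchilla (2022): Showed empirically that most large models of the time were "undertrained." For compute-optimal training, the number of parameters ($N$) and the number of training tokens ($D$) should be scaled in equal proportion, so every doubling of model size calls for a doubling of training tokens. Since training compute is roughly $C \approx 6ND$, doubling compute ($C$) grows each of $N$ and $D$ by about $\sqrt{2}$, not by $2\times$.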

· The Optimal Ratio: The Chinchilla study found the "sweet spot" is roughly 20 tokens per parameter. (e.g., a 7B model should be trained on 140B tokens).

| Concept | Key Finding |
| --- | --- |
| Compute-Optimal | For a fixed budget, $N$ and $D$ should scale in equal proportion (roughly $N \propto C^{0.5}$ and $D \propto C^{0.5}$). |
| Power Law | Loss $\approx \frac{A}{N^\alpha} + \frac{B}{D^\beta} + L_0$ (Loss decreases predictably as $N$ or $D$ grow). |
| Inference-Optimal | In 2026, we often "overtrain" models (e.g., Llama 3 trained 8B parameters on 15T tokens) to make the small model smarter for cheaper inference. |

· Senior Insight: When choosing between a 70B model trained on 2T tokens or a 7B model trained on 20T tokens, a Senior Engineer considers the inference budget. If you are serving millions of users, the 7B "overtrained" model is often the better choice because it can recover much of the larger model's quality at a fraction of the serving cost.
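The 20-tokens-per-parameter rule can be turned into a quick sizing calculation. This sketch assumes the common approximation $C \approx 6ND$ for training FLOPs of a dense Transformer, which is not stated in the text above; the function names are hypothetical.

```python
import math

TOKENS_PER_PARAM = 20       # Chinchilla rule of thumb quoted above
FLOPS_PER_PARAM_TOKEN = 6   # assumption: C ≈ 6 * N * D for dense Transformers

def training_flops(n_params, n_tokens):
    return FLOPS_PER_PARAM_TOKEN * n_params * n_tokens

def compute_optimal(compute_flops):
    # Solve C = 6 * N * (20 * N) for N, then set D = 20 * N.
    n_params = math.sqrt(compute_flops / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    return n_params, TOKENS_PER_PARAM * n_params

budget = training_flops(7e9, 140e9)      # the 7B / 140B-token example
n1, d1 = compute_optimal(budget)         # recovers roughly 7B params, 140B tokens
n2, d2 = compute_optimal(2 * budget)     # what doubling the compute buys
overtrain_ratio = 15e12 / 8e9            # Llama 3 8B: tokens per parameter

print(f"optimal at budget:    N={n1:.3g}, D={d1:.3g}")
print(f"optimal at 2x budget: N={n2:.3g}, D={d2:.3g} (growth {n2 / n1:.3f}x)")
print(f"Llama 3 8B tokens per parameter: {overtrain_ratio:.0f}")
```

Under these assumptions, doubling compute grows both the optimal model size and the optimal token count by about 1.41x, while the Llama 3 8B example sits far beyond the compute-optimal ratio, which is the deliberate "overtraining" trade described in the table.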

2.1.2 Generative AI: Diffusion Models, GANs, VAEs.

Generative AI has evolved from producing "blurry faces" to creating high-fidelity video and art. For a Senior AI Engineer, understanding these three architectures requires a look at their underlying probability densities and training stability.

1. Variational Autoencoders (VAEs)

VAEs are probabilistic graphical models that learn a compressed, continuous latent space.

· How it Works: Unlike a standard Autoencoder, the Encoder in a VAE doesn't output a single point in the latent space; it outputs parameters of a distribution (usually mean $\mu$ and variance $\sigma^2$, often predicted as a log-variance for numerical stability). We sample from this distribution to feed the Decoder.

· The Loss Function: You must balance two terms in the ELBO (Evidence Lower Bound):

· Reconstruction Loss: Ensures the output looks like the input ($L2$ or Binary Cross-Entropy).

· KL-Divergence: A regularization term that forces the latent space to follow a standard normal distribution $\mathcal{N}(0, 1)$, ensuring "gaps" in the latent space are filled for smooth interpolation.

· Senior Insight: VAEs are prone to "Posterior Collapse," where the model ignores the latent code. They often produce blurry results because the $L2$ loss encourages the model to "average" possible pixel values rather than committing to sharp edges.
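The two ingredients that distinguish a VAE from a plain autoencoder, sampling via the reparameterization trick and the KL regularizer, can be sketched in a few lines. This is a minimal illustration assuming a diagonal Gaussian posterior parameterized by mean and log-variance; the example values are arbitrary.

```python
import math
import random

def reparameterize(mu, logvar, rng):
    # z = mu + sigma * eps: the randomness lives in eps, so gradients can
    # flow through mu and logvar in a real autodiff framework.
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]

def kl_to_standard_normal(mu, logvar):
    # Closed-form KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions.
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))

rng = random.Random(0)
mu, logvar = [0.5, -1.0], [0.0, -2.0]

z = reparameterize(mu, logvar, rng)      # latent sample fed to the decoder
kl = kl_to_standard_normal(mu, logvar)   # regularization term of the ELBO
print(z, kl)
```

The KL term is zero only when the posterior equals the standard normal prior; if it is driven to zero for every input, the latent code carries no information, which is the "Posterior Collapse" failure mentioned above.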

2. Generative Adversarial Networks (GANs)

GANs treat generation as a Zero-Sum Minimax Game between two competing neural networks.

· The Players:

· Generator ($G$): Learns to map random noise to realistic data.

· Discriminator ($D$): Learns to distinguish between real data and $G$'s "fakes."

· The Objective: $\min_G \max_D V(D, G)$. The Generator wins if the Discriminator is 50% sure (pure guessing).

· Senior Insight: GANs are notoriously hard to converge. You will encounter Mode Collapse, where the generator finds one specific "type" of output (e.g., a specific face) that always fools the discriminator and stops exploring the rest of the data distribution. Techniques like Wasserstein GAN (WGAN) use Earth Mover's distance to provide a more stable gradient.
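For reference, the value function in the original GAN formulation is $V(D, G) = \mathbb{E}[\log D(x)] + \mathbb{E}[\log(1 - D(G(z)))]$, which the text above does not spell out. The sketch below estimates it on made-up discriminator outputs to show what "pure guessing" means numerically.

```python
import math

def value_fn(d_real, d_fake):
    # Batch estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# Hypothetical discriminator probabilities ("this sample is real").
confident_d = value_fn(d_real=[0.9, 0.95], d_fake=[0.1, 0.05])  # D separates real from fake
guessing_d = value_fn(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])     # D outputs 0.5 everywhere

print(confident_d, guessing_d)
```

The discriminator pushes this value up and the generator pushes it down; when the discriminator can only output 0.5, the value settles at $-\log 4$, the equilibrium the bullet above describes.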

3. Diffusion Models

Inspired by non-equilibrium thermodynamics, Diffusion models have become the "Gold Standard" in 2026 due to their training stability and diversity.

· Forward Process (Diffusion): Gradually adds Gaussian noise to an image over $T$ steps until it becomes pure noise. This is fixed, not learned.

· Reverse Process (Denoising): A U-Net or Transformer is trained to predict the noise added at each step and subtract it. By repeating this $T$ times, the model "unfolds" a coherent image from pure static.

· Senior Insight: The main drawback is Inference Latency. While a GAN generates an image in one forward pass, Diffusion takes 20–1000 steps. In production, we use Consistency Models or Distillation to reduce this to 1–4 steps for real-time applications.
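The fixed forward process has a closed form, $x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon$, so any noise level can be sampled in one step during training. The sketch below assumes a linear $\beta$ schedule from $10^{-4}$ to $0.02$ over 1000 steps, a common choice that is not specified in the text; the three-element "image" is a toy stand-in.

```python
import math
import random

def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def alpha_bars(betas):
    # Cumulative product of (1 - beta_t): share of the original signal left at step t.
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running)
    return out

def q_sample(x0, t, abar, rng):
    # Closed-form forward process: jump straight from x_0 to x_t.
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(abar[t]) * x + math.sqrt(1.0 - abar[t]) * e for x, e in zip(x0, eps)]
    return xt, eps  # eps is what the denoiser is trained to predict

abar = alpha_bars(linear_betas(1000))
x_t, noise = q_sample([1.0, -1.0, 0.5], t=999, abar=abar, rng=random.Random(0))
print(abar[0], abar[-1])
```

By the last step almost none of the original signal remains, so `x_t` is essentially pure Gaussian noise; the reverse process must learn to undo this one step at a time, which is where the inference latency comes from.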

Strategic Comparison for System Design

| Feature | VAE | GAN | Diffusion |
| --- | --- | --- | --- |
| Output Quality | Blurry / Low Fidelity | High / Sharp | Ultra-High / Photorealistic |
| Diversity | High (covers all modes) | Low (Mode Collapse risk) | High |
| Training Stability | Stable | Unstable | Stable |
| Inference Speed | Fast (Single pass) | Fast (Single pass) | Slow (Iterative) |
| Latent Space | Interpretable/Continuous | Complex/Disentangled | Indirect (Noisy Latents) |

The "Senior Decision" Matrix

· Use VAEs for anomaly detection or when you need a smooth latent space to interpolate between data points (e.g., drug discovery).

· Use GANs for real-time applications like video game texture upscaling or "Face Swap" filters where sub-second latency is non-negotiable.

· Use Diffusion for creative tools (text-to-image), high-quality synthetic data generation, or medical imaging where fidelity and diversity are more important than speed.

2.1.3 Graphs: GNNs (Graph Neural Networks) for relational data.

Graph Neural Networks (GNNs) are specifically engineered to handle data that doesn't fit into the "grid" structure of images (CNNs) or the "sequence" structure of text (Transformers). In relational data, the "geometry" is irregular—nodes can have any number of connections, and the global topology is often as important as the individual features.

1. The Core Mechanism: Message Passing

The fundamental operation of a GNN is Message Passing, which allows a node to "learn" its representation based on its neighbors.

· Step A: Aggregate: Each neighbor sends a "message" (its current feature vector). The node aggregates these using a permutation-invariant function (e.g., Sum, Mean, or Max).

· Step B: Update: The node combines its own previous state with the aggregated neighborhood message to produce a new hidden state.

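$$h_i^{(l+1)} = \text{UPDATE}\left( h_i^{(l)},\ \text{AGGREGATE}\left(\{h_j^{(l)} : j \in \mathcal{N}(i)\}\right) \right)$$

In practice, UPDATE is usually a learned linear map followed by a nonlinearity $\sigma$.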
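One round of the aggregate-then-update scheme can be sketched on a toy graph. This is a minimal illustration with mean aggregation and a fixed, hand-written update rule (average of self and neighborhood followed by ReLU); a real GNN layer would use learned weights, and the three-node graph is hypothetical.

```python
def message_passing_step(features, neighbors):
    # features: node -> feature vector; neighbors: node -> list of adjacent nodes.
    updated = {}
    for node, h_self in features.items():
        msgs = [features[j] for j in neighbors[node]]
        # AGGREGATE: element-wise mean, which ignores the order of the neighbors.
        agg = [sum(vals) / len(msgs) for vals in zip(*msgs)] if msgs else [0.0] * len(h_self)
        # UPDATE: hypothetical fixed rule standing in for a learned layer.
        updated[node] = [max(0.0, 0.5 * s + 0.5 * a) for s, a in zip(h_self, agg)]
    return updated

features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
neighbors = {"a": ["b", "c"], "b": ["a"], "c": ["a"]}

h1 = message_passing_step(features, neighbors)
print(h1)
```

All nodes are updated from the previous layer's features, so stacking $k$ such steps lets information travel $k$ hops across the graph.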

2. Comparing Key GNN Architectures

A Senior AI Engineer chooses a GNN flavor based on the specific constraints of the graph (size, dynamism, and node relationships).

| Architecture | Key Innovation | Best Use Case |
| --- | --- | --- |
| GCN (Graph Convolutional Net) | Uses a normalized adjacency matrix to perform "spectral" smoothing. | Transductive tasks (fixed graphs) where nodes are highly similar to their neighbors (homophily). |
| GAT (Graph Attention Net) | Applies self-attention over each node's neighbors. Instead of a fixed mean, it learns which neighbors are most important. | Graphs where neighbors differ in importance and some relationships are "noisier" than others. |
| GraphSAGE | Inductive Learning. Instead of training on the full graph, it samples a fixed-size neighborhood for each node. | Production-scale systems (like Pinterest or LinkedIn) where new nodes are added constantly and the full graph is too large for memory. |

3. Senior-Level Challenges: The "Bottlenecks"

When scaling GNNs in a production environment, you must navigate two primary theoretical hurdles:

· Oversmoothing: As you add more layers, the node representations tend to converge to the same value. After 3–5 layers, every node "looks" like the average of the whole graph.

· Solution: Use Skip Connections or Jumping Knowledge networks to preserve local info.

· Oversquashing: Attempting to squeeze information from an exponentially growing neighborhood (as you go deeper) into a fixed-size vector.

· Solution: Edge rewiring or using Graph Transformers for long-range dependencies.

4. GNNs for Relational Databases

In a traditional SQL database, "relational data" is stored in tables linked by Foreign Keys.

· Nodes: Represent rows in tables (e.g., Users, Products, Transactions).

· Edges: Represent the Foreign Key relationships.

· The Goal: Use GNNs to replace manual "Joins" and feature engineering. Instead of flattening the data, the GNN traverses the database schema directly to predict properties (e.g., "Is this transaction fraudulent based on the user's history and their connections' history?").
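The rows-as-nodes, foreign-keys-as-edges mapping can be sketched with two toy tables. The table names, columns, and values below are hypothetical; the point is only how a schema turns into an adjacency structure that a message-passing layer could consume.

```python
# Toy tables: each transaction row references a user row through user_id.
users = [{"user_id": 1}, {"user_id": 2}]
transactions = [
    {"txn_id": 10, "user_id": 1},
    {"txn_id": 11, "user_id": 1},
    {"txn_id": 12, "user_id": 2},
]

# Nodes: one per row, keyed by (table name, primary key).
nodes = [("users", u["user_id"]) for u in users]
nodes += [("transactions", t["txn_id"]) for t in transactions]

# Edges: one per foreign-key reference (transaction -> user).
edges = [(("transactions", t["txn_id"]), ("users", t["user_id"])) for t in transactions]

# Undirected adjacency list, so information can flow in both directions.
adjacency = {n: [] for n in nodes}
for src, dst in edges:
    adjacency[src].append(dst)
    adjacency[dst].append(src)

print(adjacency[("users", 1)])
```

With this structure, two transactions by the same user are two hops apart, so a two-layer GNN can relate them without a hand-written join.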

5. Practice & Implementation

For a production-grade GNN, you would typically use PyTorch Geometric (PyG) or DGL (Deep Graph Library). These libraries handle the sparse matrix multiplications efficiently on GPUs.

2.2.1 Optimization: Adaptive optimizers (AdamW, Lion), Convergence analysis.

As a Senior AI Engineer, you aren't just looking for "which optimizer is best," but rather understanding how weight decay interacts with adaptive learning rates and how memory constraints dictate the choice of optimizer in trillion-parameter regimes.

1. AdamW: Solving the Weight Decay Dilemma

Standard Adam implements $L_2$ regularization by adding the penalty to the gradient. For adaptive optimizers, this is problematic because the "effective" weight decay becomes dependent on the historical gradient magnitudes.

· The Problem: Parameters with large gradients get a smaller adaptive learning rate, which inadvertently scales down their weight decay. Parameters with small gradients get the opposite. This leads to inconsistent regularization.

· The Solution (AdamW): It decouples the weight decay from the gradient update. The decay is applied directly to the weights after the adaptive step is calculated.

· The Math:

1. Update biased first/second moments ($m_t, v_t$).

2. Apply weight decay directly:

$$\theta_{t+1} = \theta_t - \eta \left( \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon} + \lambda \theta_t \right)$$

where $\lambda$ is the weight decay coefficient and $\eta$ is the learning rate.
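The update rule above can be sketched as a single optimizer step over a list of scalar parameters. This is a minimal pure-Python illustration, not a framework implementation; the default hyperparameters are common values assumed for the example.

```python
import math

def adamw_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.01):
    new_theta, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(theta, grad, m, v):
        m_i = beta1 * m_i + (1 - beta1) * g        # biased first moment
        v_i = beta2 * v_i + (1 - beta2) * g * g    # biased second moment
        m_hat = m_i / (1 - beta1 ** t)             # bias correction (t starts at 1)
        v_hat = v_i / (1 - beta2 ** t)
        adaptive = m_hat / (math.sqrt(v_hat) + eps)
        # Decoupled decay: lambda * p is added outside the adaptive scaling,
        # so it is not divided by sqrt(v_hat).
        new_theta.append(p - lr * (adaptive + weight_decay * p))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_theta, new_m, new_v

# With zero gradients, only the decay term acts on the weights.
theta, m, v = [1.0, -2.0], [0.0, 0.0], [0.0, 0.0]
theta, m, v = adamw_step(theta, grad=[0.0, 0.0], m=m, v=v, t=1)
print(theta)
```

With a zero gradient every weight shrinks by the same factor $(1 - \eta\lambda)$ regardless of its gradient history, which is the consistent regularization that coupling the penalty into the gradient cannot provide.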

2. Lion: EvoLved sIgn mOmeNtum

Discovered via symbolic program search by Google researchers, Lion is a common choice for memory-constrained LLM training in 2025–2026, although AdamW remains the usual default.

· Key Innovation: It removes the second-moment ($v_t$) tracking entirely, which halves optimizer-state memory relative to AdamW (one momentum buffer instead of two). Instead of scaling by variance, it uses the sign operation so that every parameter update has the same magnitude.

· Update Rule:

$$c_t = \text{interpolate}(g_t, m_{t-1}, \beta_1)$$

$$\theta_{t+1} = \theta_t - \eta \left( \text{sign}(c_t) + \lambda \theta_t \right)$$

$$m_t = \text{interpolate}(g_t, m_{t-1}, \beta_2)$$

where $\text{interpolate}(g, m, \beta) = \beta m + (1 - \beta) g$.

· Senior Insight: Lion requires a 3x–10x smaller learning rate than AdamW because the update norm is generally larger. It thrives on large batch sizes (e.g., 4096+), where the noise in the sign operation is averaged out effectively.
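The Lion update can be sketched the same way as a single step over scalar parameters. This is a minimal pure-Python illustration; the defaults ($\beta_1 = 0.9$, $\beta_2 = 0.99$, learning rate $10^{-4}$) are assumptions for the example, and the gradients are chosen to span five orders of magnitude.

```python
def sign(x):
    # Returns -1, 0, or 1.
    return (x > 0) - (x < 0)

def lion_step(theta, grad, m, lr=1e-4, beta1=0.9, beta2=0.99, weight_decay=0.0):
    new_theta, new_m = [], []
    for p, g, m_i in zip(theta, grad, m):
        c = beta1 * m_i + (1 - beta1) * g              # interpolation used for the direction
        new_theta.append(p - lr * (sign(c) + weight_decay * p))
        new_m.append(beta2 * m_i + (1 - beta2) * g)    # momentum is the only stored state
    return new_theta, new_m

theta, m = [1.0, 1.0, 1.0], [0.0, 0.0, 0.0]
new_theta, m = lion_step(theta, grad=[100.0, -0.001, 0.0], m=m)
deltas = [b - a for a, b in zip(theta, new_theta)]
print(deltas)
```

The first two parameters move by the same amount (one learning rate) despite gradients of 100 and 0.001, which illustrates why the update norm tends to be larger than AdamW's and why a smaller learning rate is needed.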

3. Convergence Analysis: Beyond L-Smoothness

For high-level roles, "convergence" isn't just a downward line on a chart. It's about the mathematical guarantees of the optimizer's path.

Traditional vs. Modern Assumptions

· L-Smoothness: Historically, we assumed the gradient doesn't change faster than a constant $L$. In deep networks, this is rarely true.

· $(L_0, L_1)$-Smoothness: Newer research assumes local smoothness may grow with the gradient norm: $\|\nabla^2 f(x)\| \le L_0 + L_1 \|\nabla f(x)\|$. Adaptive methods such as Adam (and gradient clipping) have convergence guarantees under this weaker condition, because their effective step size naturally "slows down" in sharp regions of the loss landscape.

Convergence States

| Term | Definition |
| --- | --- |
| Almost Sure Convergence | With probability 1, the sequence of weights converges as iterations $T \to \infty$. For non-convex deep networks the limit is typically a stationary point, not a guaranteed global minimum. |
| Convergence in Distribution | The distribution of the weights settles into a stable state (used in Bayesian Neural Networks). |
| Saddle Point Avoidance | Second-order optimizers (like Sophia) or specific noise injections help "escape" plateau regions where the gradient is near zero but not at a minimum. |

Summary Comparison

| Optimizer | Memory State | Regularization | Batch Sensitivity |
| --- | --- | --- | --- |
| AdamW | $2 \times$ Parameters | Decoupled Weight Decay | Good for small to medium batches. |
| Lion | $1 \times$ Parameters | Decoupled Weight Decay (with sign-based updates) | Best for large batches (high compute). |
| SGD+M | $1 \times$ Parameters | Standard $L_2$ | Highly sensitive to LR scheduling. |

2.2.2 Stability: Weight decay, Gradient clipping, Normalization (Batch, Layer, RMSNorm).

Training stability is the difference between a model that converges to a state-of-the-art result and one that "explodes" (NaN gradients) or "vanishes" (stops learning). For a Senior AI Engineer, these are the primary levers used to control the numerical health of a deep network.

1. Weight Decay (Regularization for Stability)

While often viewed as a tool to prevent overfitting, Weight Decay is a fundamental stability mechanism. It prevents the weights from growing indefinitely large during the optimization process.

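· How it Works: In its classic $L_2$ form, it adds a penalty proportional to the squared magnitude of the weights to the loss function; in the decoupled form, it shrinks the weights directly at every update step.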

· The Stability Benefit: By keeping weights small, the model becomes less sensitive to small fluctuations in input data. In the context of AdamW, weight decay is decoupled from the gradient update to ensure that the "pull" towards zero is consistent regardless of the adaptive learning rate.

· Mathematical Form:

$$\theta_{t+1} = \theta_t - \eta \nabla L(\theta_t) - \eta \lambda \theta_t$$

where $\lambda$ is the weight decay coefficient. Without this, weights in adaptive optimizers can "drift" into high-magnitude regions where gradients become numerically unstable.
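The update rule above can be sketched in a few lines. This is a minimal illustration on plain Python lists, using a plain gradient step in place of Adam's adaptive step; the function name and the toy values are assumptions made for the example.

```python
def decoupled_weight_decay_step(theta, grad, lr, weight_decay):
    """One step of theta <- theta - lr * grad - lr * weight_decay * theta."""
    return [t - lr * g - lr * weight_decay * t for t, g in zip(theta, grad)]


theta = [1.0, -2.0]
grad = [0.0, 0.0]  # a zero gradient isolates the decay term
theta_next = decoupled_weight_decay_step(theta, grad, lr=0.1, weight_decay=0.5)
```

With a zero gradient, each weight shrinks toward zero by the factor $(1 - \eta\lambda)$, which is the consistent "pull" the decoupled form is meant to provide.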

2. Gradient Clipping

In complex architectures like LLMs or RNNs, gradients can occasionally "explode," resulting in update steps so large they move the parameters into a region of the loss landscape from which the model cannot recover.

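· Value Clipping: Clamps each gradient component to a fixed range $[-c, c]$ (e.g., with $c = 5$, a component $g_i > 5$ becomes $5$ and $g_i < -5$ becomes $-5$). Because components are clamped independently, this can change the gradient's direction.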

· Norm Clipping (Senior Standard): Scales the entire gradient vector so its $L_2$ norm does not exceed a threshold. This preserves the direction of the gradient while limiting the step size.

· The Formula: If $\|\mathbf{g}\| > v$, then $\mathbf{g} \leftarrow \mathbf{g} \frac{v}{\|\mathbf{g}\|}$.

· Why it matters: It is essential during the "warm-up" phase of training when the loss landscape is very jagged.
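The two clipping variants above can be compared with a small sketch. It works on a flat list of floats rather than framework tensors, and the helper names are illustrative only.

```python
import math


def clip_by_value(grad, limit):
    """Clamp every component independently to [-limit, limit]."""
    return [max(-limit, min(limit, g)) for g in grad]


def clip_by_norm(grad, max_norm):
    """Rescale the whole vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= max_norm or norm == 0.0:
        return list(grad)
    scale = max_norm / norm
    return [g * scale for g in grad]


g = [3.0, 4.0]                 # L2 norm is 5
clipped = clip_by_norm(g, 1.0)  # same direction, norm 1
```

Norm clipping keeps the ratio between components (here 3:4), while value clipping does not.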

3. The Normalization Trinity

Normalization layers stabilize the "Internal Covariate Shift"—the phenomenon where the distribution of inputs to a layer changes as previous layers update.

Batch Normalization (BN)

· Mechanism: Normalizes across the batch dimension.

· Senior Insight: While effective for CNNs, it is rarely used in LLMs because it depends on batch size. If the batch size is too small, the estimated mean and variance are noisy, leading to training instability. It also introduces a "dependency" between samples in a batch, which can be problematic for distributed training.

Layer Normalization (LN)

· Mechanism: Normalizes across the feature dimension for each sample independently.

· Senior Insight: This is the default for Transformers. Since it doesn't depend on other samples in the batch, it works identically during training and inference and is robust to varying sequence lengths.

· Formula: $\hat{x} = \frac{x - \mu}{\sqrt{\sigma^2 + \epsilon}} \cdot \gamma + \beta$

RMSNorm (Root Mean Square Layer Normalization)

· Mechanism: A simplified version of Layer Norm that only scales by the root mean square of the activations, omitting the mean-centering step.

· Why Modern Models (Llama 3, Gopher) Use It:

1. Computational Efficiency: It is roughly 10–40% faster than standard LN because it avoids calculating the mean.

2. Invariance: It provides similar re-scaling properties to LN but is more numerically stable in low-precision training (FP16/BF16).

· The Formula: $\bar{a}_i = \frac{a_i}{\sqrt{\frac{1}{n} \sum_{j=1}^n a_j^2}} \cdot g_i$
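To make the difference between the two formulas concrete, here is a minimal sketch of both normalizations on a single feature vector. The small `eps` inside the RMSNorm square root is an assumption added for numerical safety; it does not appear in the formula above.

```python
import math


def layer_norm(x, gamma, beta, eps=1e-5):
    """Center by the mean, scale by the standard deviation, then apply gamma/beta."""
    mu = sum(x) / len(x)
    var = sum((v - mu) ** 2 for v in x) / len(x)
    return [(v - mu) / math.sqrt(var + eps) * g + b
            for v, g, b in zip(x, gamma, beta)]


def rms_norm(a, g, eps=1e-5):
    """Scale by the root mean square only; no mean subtraction, no bias."""
    rms = math.sqrt(sum(v * v for v in a) / len(a) + eps)
    return [v / rms * gi for v, gi in zip(a, g)]


x = [1.0, 2.0, 3.0, 4.0]
ln_out = layer_norm(x, gamma=[1.0] * 4, beta=[0.0] * 4)
rms_out = rms_norm(x, g=[1.0] * 4)
```

The LayerNorm output has zero mean, while the RMSNorm output keeps the sign and relative offset of the inputs and only fixes their overall scale.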

Summary Comparison for Architecture Design

| Technique | Where to Apply | Primary Risk if Omitted |
| --- | --- | --- |
| Weight Decay | Every layer (except Bias/LayerNorm scales). | Weights grow to $\infty$, causing numerical overflow. |
| Grad Clipping | Global (applied to total gradient norm). | Catastrophic "spikes" in loss; training collapse. |
| Batch Norm | Computer Vision (CNNs). | Slow convergence; high sensitivity to initialization. |
| Layer Norm | Standard Transformers / RNNs. | Exploding activations in deep stacks. |
| RMSNorm | High-performance LLMs (2025+). | Higher latency and unnecessary mean calculation overhead. |

2.3.1 Fine-Tuning: LoRA, QLoRA, Prefix Tuning, Adapter layers.

In the landscape of 2026, Parameter-Efficient Fine-Tuning (PEFT) has shifted from a "nice-to-have" to a mandatory engineering requirement for deploying LLMs at scale. For a Senior AI Engineer, these techniques are about balancing VRAM constraints, inference latency, and task-specific performance.

1. LoRA (Low-Rank Adaptation)

LoRA is the industry standard because it offers the best balance of efficiency and performance without increasing inference latency.

· The Architecture: Instead of updating the full weight matrix $W \in \mathbb{R}^{d \times k}$, LoRA assumes the update $\Delta W$ has a low "intrinsic rank." It represents the update as the product $\Delta W = BA$ of two small matrices, $B \in \mathbb{R}^{d \times r}$ and $A \in \mathbb{R}^{r \times k}$, where $r \ll \min(d, k)$.

· The Math: During the forward pass, the operation becomes:

$$h = W_0x + \Delta Wx = W_0x + BAx$$

· Inference Efficiency: After training, you can "merge" $BA$ back into $W_0$ (i.e., $W_{new} = W_0 + BA$). This results in zero additional latency during inference.

· Senior Insight: Selecting the right $r$ (rank) and $\alpha$ (scaling factor) is critical. Usually, $r=8$ or $r=16$ is sufficient for most tasks. A higher $r$ doesn't always lead to better performance and can cause overfitting.
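The following sketch checks the merge property numerically on a tiny example. It assumes the shapes $B \in \mathbb{R}^{d \times r}$ and $A \in \mathbb{R}^{r \times k}$ (so that $BA$ matches $W_0$) and the common $\alpha / r$ scaling of the low-rank term; the matrices are arbitrary toy values, not trained weights.

```python
def matmul(X, Y):
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lora_forward(W0, A, B, x, alpha, r):
    """h = W0 x + (alpha / r) * B (A x), keeping the adapter separate."""
    scale = alpha / r
    base = matvec(W0, x)
    low_rank = matvec(B, matvec(A, x))
    return [b + scale * l for b, l in zip(base, low_rank)]


def merge_lora(W0, A, B, alpha, r):
    """Fold the adapter into the base weights: W_new = W0 + (alpha / r) * B A."""
    scale = alpha / r
    BA = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W0, BA)]


d, k, r = 3, 4, 1
W0 = [[1.0, 0.0, 2.0, -1.0], [0.5, 1.0, 0.0, 0.0], [0.0, -2.0, 1.0, 3.0]]
B = [[1.0], [2.0], [-1.0]]       # d x r
A = [[0.5, -1.0, 0.0, 2.0]]      # r x k
x = [1.0, 2.0, 3.0, 4.0]

h_adapter = lora_forward(W0, A, B, x, alpha=2.0, r=r)
h_merged = matvec(merge_lora(W0, A, B, alpha=2.0, r=r), x)
trainable = d * r + r * k        # adapter parameters, versus d * k for full fine-tuning
```

Both paths give the same output, which is why a merged LoRA adds no inference latency; the adapter here trains 7 values instead of 12, and the gap widens quickly as $d$ and $k$ grow.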

2. QLoRA (Quantized LoRA)

QLoRA is the breakthrough that allowed a 65B-parameter model to be fine-tuned on a single 48 GB GPU, and 33B-class models on a single 24 GB consumer GPU (e.g., RTX 4090).

· Key Innovations:

· 4-bit NormalFloat (NF4): A specialized data type that is information-theoretically optimal for normally distributed weights.

· Double Quantization: Quantizing the quantization constants themselves to save an additional 0.37 bits per parameter.

· Paged Optimizers: Uses NVIDIA unified memory to handle "VRAM spikes" by offloading optimizer states to CPU RAM when necessary.

· Senior Insight: QLoRA is essentially "free" memory savings with almost no accuracy drop (typically $<1\%$) compared to LoRA. However, training is slightly slower due to the constant de-quantization steps needed for the forward and backward passes.
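The 0.37-bit figure for Double Quantization can be reproduced with simple arithmetic. The sketch below assumes the block sizes reported in the QLoRA paper (one 32-bit constant per block of 64 weights, and second-level blocks of 256 constants stored in 8 bits); the 65B weight-memory estimate counts base weights only.

```python
def constant_overhead_bits(block_size=64, const_bits=32):
    """Extra bits per parameter when each block stores one full-precision constant."""
    return const_bits / block_size


def double_quant_overhead_bits(block_size=64, second_block=256,
                               quant_bits=8, const_bits=32):
    """Extra bits per parameter when the constants are themselves quantized."""
    return quant_bits / block_size + const_bits / (block_size * second_block)


saving = constant_overhead_bits() - double_quant_overhead_bits()

# Rough weight memory for a 65B model at 4 bits plus the double-quantized constants.
bits_per_param = 4 + double_quant_overhead_bits()
weights_gb = 65e9 * bits_per_param / 8 / 1e9
```

Under these assumptions the saving is about 0.373 bits per parameter, and the 4-bit base weights of a 65B model come to roughly 33.5 GB before activations, LoRA parameters, and optimizer state are counted.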

3. Adapter Layers

The "original" PEFT technique, adapters involve inserting small, trainable modules between existing Transformer layers.

· Structure: A typical adapter is a bottleneck structure:

· Down-projection: Projects the dimension $d$ to a smaller bottleneck $r$.

· Non-linearity: (e.g., ReLU or GeLU).

· Up-projection: Projects back to dimension $d$.

· Residual connection: Adds the original input back to the output.

· The Trade-off: Unlike LoRA, adapters cannot be merged into the base weights. This means they add sequential layers to the model, which increases inference latency.

· Senior Insight: Adapters are superior when you need to store thousands of task-specific modules. Because they are modular, you can use AdapterFusion to combine multiple trained adapters (e.g., a "Sentiment" adapter + a "Legal Domain" adapter) dynamically.

4. Prefix Tuning

Prefix tuning is a step up from "Prompt Tuning." While prompt tuning only adds tokens to the input layer, Prefix Tuning adds trainable "prefix" vectors to every layer of the Transformer.

· The Mechanism: For each layer, a set of continuous, task-specific vectors (the "prefix") is prepended to the $K$ (Key) and $V$ (Value) matrices.

· The Math: Instead of attending only to the input tokens, the self-attention mechanism also attends to these "virtual tokens."

· Senior Insight: Prefix Tuning is highly effective for NLG (Natural Language Generation) tasks but can be more sensitive to initialization. It uses fewer parameters than LoRA but is harder to implement because it requires modifying the internal attention head logic of the model.

Quick Comparison Table

| Technique | Trainable Params | Inference Overhead | Latency | Memory Savings |
| --- | --- | --- | --- | --- |
| LoRA | Very Low ($<1\%$) | None (Mergeable) | Low | High |
| QLoRA | Very Low ($<1\%$) | None (after de-quant) | Medium (Training) | Extreme |
| Adapters | Low ($1-3\%$) | Extra layers | Increased | High |
| Prefix Tuning | Ultra Low ($<0.1\%$) | Extra KV-cache | Low | Very High |

Strategic Recommendation

· Production/High-Traffic: Use LoRA. The zero-latency overhead is vital for cost-effective serving.

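· Budget/Single-GPU Training: Use QLoRA. It’s the most practical way to touch massive models on a single GPU. Note that the 4-bit weights of a 70B model alone take roughly 35 GB, so Llama-3-70B needs a 48 GB-class card (or offloading) rather than 24GB VRAM.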

· Multi-Task/Modular Apps: Use Adapters. They allow you to swap "skills" in and out without reloading the 100GB base model.

2.3.2 Compression: Quantization (INT8, FP4), Pruning, Knowledge Distillation.

Model compression is the process of reducing the size and computational requirements of a neural network while preserving as much of its original performance as possible. For a Senior AI Engineer, this is less about "shrinking files" and more about hardware-software co-design—optimizing how tensors interact with silicon.

1. Quantization: The Precision Trade-off

Quantization maps high-precision values (FP32/BF16) to lower-precision formats. In 2026, the industry has shifted toward Fine-Grained Low-Bit formats to balance accuracy with extreme hardware throughput.

· INT8 (8-bit Integer):

· Mechanism: Uses a linear mapping (Scale and Zero-point) to represent values as integers from -128 to 127.

· Senior Insight: SmoothQuant and Outlier-aware quantization are critical here. Because LLM activations often contain "outliers" (values much larger than the mean), naive per-tensor quantization loses accuracy; SmoothQuant shifts part of that dynamic range from the activations into the weights. With these techniques, INT8 is now the "safe" baseline for near-lossless compression ($>99\%$ accuracy recovery).

· FP4 (4-bit Floating Point):

· The Modern Standard: Formats like NVFP4 (NVIDIA) and MXFP4 (Microscaling) are the new frontier.

· Technical Detail: Unlike INT4, FP4 uses a dedicated Exponent and Mantissa (e.g., E2M1 or E3M0). This allows the format to represent a wider dynamic range, making it significantly more effective at capturing the distribution of neural weights than linear integers.

· Hardware Co-design: FP4 requires specialized hardware (like NVIDIA Blackwell/Rubin architectures) to see real speedups.
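The INT8 scale and zero-point mapping described above can be sketched as follows. This is a simple per-tensor asymmetric scheme written for illustration; the function names are assumptions, and production libraries typically use per-channel or per-group scales.

```python
def quant_params(values, qmin=-128, qmax=127):
    """Pick a scale and zero-point so [min, max] (including 0) maps onto [qmin, qmax]."""
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale for all-zero input
    zero_point = round(qmin - lo / scale)
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(q, scale, zero_point):
    return [(qi - zero_point) * scale for qi in q]


weights = [-1.0, 0.0, 0.5, 2.0]
scale, zero_point = quant_params(weights)
q = quantize(weights, scale, zero_point)
restored = dequantize(q, scale, zero_point)
```

The round-trip error is bounded by the scale. A single large outlier stretches the range and therefore the scale, which is why outlier handling matters so much for per-tensor schemes.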

2. Pruning: Removing Redundancy

Pruning identifies and removes "unimportant" parameters. It assumes that most deep networks are significantly over-parameterized.

· Unstructured Pruning (Weight Pruning):

· How it works: Individual weights with low magnitudes are set to zero.

· The Problem: This creates sparse matrices. Most standard GPUs are optimized for dense math; unless you have specialized sparse-tensor cores, unstructured pruning rarely improves inference speed—it only reduces storage size.

· Structured Pruning (Neurons/Channels/Layers):

· How it works: Removes entire architectural blocks (e.g., an entire Attention Head or a MLP layer).

· Senior Insight: This is the preferred method for production. It results in a smaller "dense" model that runs faster on any hardware. Modern techniques like LLM-Pruner use dependency graphs to ensure that removing one layer doesn't break the mathematical "flow" of the next.

3. Knowledge Distillation (KD)

Knowledge Distillation is a "Teacher-Student" training paradigm where a large, cumbersome model transfers its "dark knowledge" to a compact student.

· The Mechanism: The student doesn't just learn from "Hard Labels" (the ground truth); it learns from the Soft Labels (the probability distribution) of the teacher.

· Soft Targets: If a teacher is 90% sure an image is a "Labrador" and 10% sure it's a "Golden Retriever," that 10% tells the student about the similarity between the two classes.

· Advanced Techniques (2025-2026):

· Feature-Based Distillation: Aligning the intermediate hidden states of the student with the teacher using a projection layer.

· Reverse KL Divergence: Used in generative models to encourage "mode-seeking" behavior, ensuring the student produces precise, high-quality outputs rather than blurry averages.

· Multi-Teacher Distillation: Using multiple models (e.g., GPT-4 and Claude) to provide a more robust and less biased signal to a smaller open-source student.
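A minimal sketch of the classic soft-label objective follows. It assumes the common formulation that mixes a temperature-softened KL term (scaled by $T^2$) with the hard-label cross-entropy; `alpha` and `temperature` are illustrative hyperparameters, not values from this guide.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def distillation_loss(student_logits, teacher_logits, hard_label,
                      temperature=2.0, alpha=0.5):
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = kl_divergence(p_teacher, p_student) * temperature ** 2
    hard = -math.log(softmax(student_logits)[hard_label])
    return alpha * soft + (1 - alpha) * hard


loss = distillation_loss([1.0, 0.5, 0.1], [3.0, 2.5, 0.0], hard_label=0)
```

Swapping the arguments of `kl_divergence` (student first, teacher second) gives the reverse-KL variant mentioned above.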

Summary of Compression Strategies

| Technique | Primary Benefit | Implementation Complexity | Hardware Dependency |
| --- | --- | --- | --- |
| Quantization | 2x–4x Memory Reduction | Low (Post-Training) | High (Needs specific FP4/INT8 cores) |
| Pruning | Reduced Flops / Latency | High (Requires Fine-tuning) | Low (for Structured) |
| Distillation | High Quality at Small Size | Very High (Requires full training) | None |

3 AI System Design & Infrastructure

3.1.1 Parallelism: Data Parallelism (FSDP), Tensor Parallelism, Pipeline Parallelism.

Since we've already covered the basics, this deeper dive focuses on the communication overhead, mathematical constraints, and 2026-standard architectures (like Context Parallelism) that a Senior AI Engineer must master to scale models to trillions of parameters.

1. FSDP & ZeRO-3: The Communication Trade-off

Fully Sharded Data Parallelism (FSDP) is the evolution of ZeRO-3. As a Senior Engineer, you must understand the Communication Collectives involved:

· The Mechanism: Instead of a single All-Reduce (used in standard DDP), FSDP breaks it into two distinct steps:

· Reduce-Scatter: Sums gradients across GPUs and shards them so each GPU only stores its portion.

· All-Gather: Before the forward/backward pass, GPUs broadcast their shards so everyone has the full weights temporarily.

· The Overhead: FSDP increases total communication volume by roughly 50% compared to standard DDP, but it reduces VRAM usage for weights/gradients by a factor of $N$ (number of GPUs).

· Senior Insight: In 2026, we use FSDP2, which allows for "granularity control"—sharding only the optimizer states (ZeRO-2) while keeping weights replicated if the interconnect (Ethernet vs. InfiniBand) is too slow for constant All-Gathers.

2. Tensor Parallelism (TP): Intra-Node Scaling

TP is used when a single layer's activations or weights exceed one GPU's VRAM.

· Column Parallel (Linear Layer 1): Splits the weight matrix $W$ vertically. Each GPU $i$ computes $XW_i$.

· Row Parallel (Linear Layer 2): Splits the weight matrix $W$ horizontally. Each GPU $i$ computes its partial result, and an All-Reduce is required at the end to sum them.

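· The Bottleneck: TP requires an All-Reduce after each row-parallel layer. In a Megatron-style Transformer block that means two All-Reduces in the forward pass (after attention and after the MLP) and two more in the backward pass.

· Math: Each All-Reduce moves an activation tensor of size $\text{batch} \times \text{seq\_len} \times \text{hidden\_dim}$, so per-step communication volume scales as $O(\text{layers} \times \text{batch} \times \text{seq\_len} \times \text{hidden\_dim})$.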

· Senior Insight: Because of this high frequency, TP is almost never used across nodes. It is strictly for GPUs connected via NVLink (900GB/s+) or NVSwitch.
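The column-then-row split can be verified on a toy two-layer MLP. In this sketch each "GPU" is just a list entry, the final sum stands in for the All-Reduce, and all matrices are made-up values.

```python
def matmul(X, Y):
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def relu(M):
    return [[max(0.0, v) for v in row] for row in M]


def split_columns(W, parts):
    step = len(W[0]) // parts
    return [[row[i * step:(i + 1) * step] for row in W] for i in range(parts)]


def split_rows(W, parts):
    step = len(W) // parts
    return [W[i * step:(i + 1) * step] for i in range(parts)]


def add(M, N):
    return [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(M, N)]


X = [[1.0, -2.0]]
W1 = [[1.0, 2.0, -1.0, 0.5], [0.0, -1.0, 3.0, 1.0]]        # 2 x 4, column parallel
W2 = [[1.0, 0.0], [2.0, 1.0], [-1.0, 1.0], [0.5, -2.0]]    # 4 x 2, row parallel

reference = matmul(relu(matmul(X, W1)), W2)

# Each shard computes relu(X @ W1_i) @ W2_i with no communication in between.
partials = [matmul(relu(matmul(X, w1)), w2)
            for w1, w2 in zip(split_columns(W1, 2), split_rows(W2, 2))]

all_reduced = partials[0]
for p in partials[1:]:
    all_reduced = add(all_reduced, p)   # stands in for the All-Reduce
```

Because the element-wise non-linearity acts on each column shard independently, only one summation is needed per pair of layers.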

3. Pipeline Parallelism (PP): The Bubble Math

PP is for "vertical" scaling. The primary metric for a Senior Engineer is the Pipeline Bubble (idle time).

· The Schedule: 1F1B (One Forward, One Backward) is the standard. It alternates forward and backward passes to keep memory usage constant.

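· The Bubble Equation: Assuming equal-cost stages, the pipeline's idle time relative to its ideal compute time is:

$$\text{Bubble Fraction} = \frac{D - 1}{M}$$

where $D$ is the number of pipeline stages (GPUs) and $M$ is the number of micro-batches. As a share of total step time, this corresponds to $\frac{D - 1}{M + D - 1}$.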

· Senior Insight: To minimize the bubble, you want $M \gg D$. However, increasing $M$ increases the memory used by stored activations. To solve this, we now use Interleaved Pipelines, where each GPU handles multiple non-consecutive stages (e.g., GPU 1 handles layers 1-2 and layers 9-10).
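A quick calculator makes the trade-off tangible. The sketch assumes equal-cost stages and treats $(D - 1)/M$ as idle time relative to ideal compute time; the interleaved variant assumes the bubble shrinks by the number of model chunks per device, as described for interleaved schedules in the Megatron-LM work.

```python
def bubble_ratio(stages, micro_batches):
    """Idle time divided by ideal compute time: (D - 1) / M."""
    return (stages - 1) / micro_batches


def idle_fraction(stages, micro_batches):
    """Idle time as a share of the total step time."""
    return (stages - 1) / (micro_batches + stages - 1)


def interleaved_bubble_ratio(stages, micro_batches, chunks_per_device):
    """Bubble ratio when each device hosts several non-consecutive model chunks."""
    return (stages - 1) / (micro_batches * chunks_per_device)


plain = bubble_ratio(stages=8, micro_batches=32)
share = idle_fraction(stages=8, micro_batches=32)
interleaved = interleaved_bubble_ratio(stages=8, micro_batches=32, chunks_per_device=2)
```

With 8 stages and 32 micro-batches the bubble ratio is about 22%, and two chunks per device halve it at the cost of extra point-to-point communication.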

4. 2026 Special: Context Parallelism (CP)

With the rise of "Million-Token Context," we now need to shard the Sequence Dimension itself.

· DeepSpeed-Ulysses: Shards the sequence across GPUs. Before the Attention step, it performs an All-to-All communication to gather all sequence tokens but only for a subset of Attention Heads.

· Pros: Extremely fast for models with many heads.

· Ring Attention: Nodes are arranged in a ring. They pass Key ($K$) and Value ($V$) blocks to their neighbors in a circle, computing attention locally as the data "travels."

· Pros: Allows for very long context (10M+ tokens) because per-GPU attention memory depends on the block size rather than the full sequence length, so the maximum context grows with the number of devices in the ring.

Summary of Communication Collective Usage

| Parallelism | Primary Collective | Network Requirement | 2026 Status |
| --- | --- | --- | --- |
| FSDP | Reduce-Scatter / All-Gather | Medium (High-speed Eth) | Universal standard for DP. |
| Tensor (TP) | All-Reduce | Ultra-High (NVLink) | Intra-node only. |
| Pipeline (PP) | P2P (Send/Recv) | Low (Inter-node okay) | Essential for >100B models. |
| Context (CP) | All-to-All / P2P Ring | High (for Ulysses) | Mandatory for long-context. |

3.1.2 Distributed Compute: NCCL, InfiniBand, GPU Interconnects (NVLink).

For a Senior AI Engineer, Distributed Compute is about removing the "tax" of moving data. When training models at scale, the time GPUs spend waiting for data from other GPUs is "dead time." These three technologies work together to minimize that latency and maximize throughput.

1. NVLink: The Intra-Node "Highway"

NVLink is a hardware-based, high-speed interconnect designed specifically for direct GPU-to-GPU communication within a single server (node).

· The Problem it Solves: Traditional PCIe slots are a bottleneck. Data moving from GPU A to GPU B via PCIe often has to travel through the CPU and system memory, causing massive latency.

· The Solution: NVLink provides a direct "bridge" between GPUs. Modern iterations offer total bidirectional bandwidth per GPU of 900 GB/s (NVLink 4.0) to 1.8 TB/s (NVLink 5.0), roughly 7x to 14x that of a PCIe Gen5 x16 link (about 128 GB/s bidirectional).

· Senior Insight: NVLink enables Memory Pooling. With a unified memory space, a 100B parameter model can "see" the VRAM of all 8 GPUs in a node as one giant bucket of memory, allowing for smoother Model Parallelism.

2. InfiniBand (IB): The Inter-Node "Fabric"

While NVLink handles communication inside a box, InfiniBand is the high-performance network protocol used to connect multiple boxes across a data center.

· RDMA (Remote Direct Memory Access): This is the "killer feature" of InfiniBand. It allows a GPU in Server 1 to read/write data directly into the memory of a GPU in Server 2 without involving either server's CPU or OS kernel.

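· Low Latency: Unlike standard Ethernet, which is "lossy" by default and relies on retransmission to recover dropped packets, InfiniBand is a credit-based, lossless fabric. End-to-end latency is typically measured in microseconds (with individual switch hops in the hundreds of nanoseconds), not milliseconds.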

· Senior Insight: When designing a cluster for 1,000+ GPUs, you must choose a Fat-Tree or Torus topology for your InfiniBand switches to avoid "oversubscription"—ensuring that every node can talk to every other node at full wire speed simultaneously.

3. NCCL: The "Orchestrator" (Software)

NCCL (pronounced "Nickel") stands for the NVIDIA Collective Communications Library. It is the software layer that sits between your code (PyTorch/TensorFlow) and the hardware (NVLink/InfiniBand).

· Role: It provides optimized "Collective" operations. Instead of you writing code to manually move data, you call all_reduce, broadcast, or all_gather.

· Topology Awareness: NCCL is "smart." It detects the hardware environment. If it sees NVLink, it uses it. If it needs to go to another node, it automatically routes traffic through the InfiniBand NICs.

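· The Math of All-Reduce: NCCL uses algorithms like Ring All-Reduce or Tree All-Reduce so that communication time scales gracefully as you add GPUs. In a ring, each GPU sends about $2\frac{N-1}{N}S$ bytes for a buffer of $S$ bytes, which is nearly independent of the GPU count $N$; trees cut the number of latency-bound steps from $O(N)$ to $O(\log N)$.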
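To show why a ring keeps per-GPU traffic nearly constant, here is a small single-process simulation of a ring all-reduce. It is an illustrative sketch, not NCCL's implementation: each rank's buffer is split into as many chunks as there are ranks, and one list element plays the role of one chunk.

```python
def ring_all_reduce(buffers):
    """Simulate ring all-reduce; returns (per-rank results, total chunks sent)."""
    n = len(buffers)
    data = [list(b) for b in buffers]   # data[rank][chunk]
    sent = 0

    # Phase 1, reduce-scatter: after n - 1 steps, rank i owns the full sum of chunk (i + 1) % n.
    for step in range(n - 1):
        messages = [((rank - step) % n, data[rank][(rank - step) % n])
                    for rank in range(n)]
        for rank, (chunk, value) in enumerate(messages):
            data[(rank + 1) % n][chunk] += value
            sent += 1

    # Phase 2, all-gather: completed chunks travel around the ring and overwrite stale copies.
    for step in range(n - 1):
        messages = [((rank + 1 - step) % n, data[rank][(rank + 1 - step) % n])
                    for rank in range(n)]
        for rank, (chunk, value) in enumerate(messages):
            data[(rank + 1) % n][chunk] = value
            sent += 1

    return data, sent


result, chunks_sent = ring_all_reduce([[1.0, 2.0, 3.0],
                                       [10.0, 20.0, 30.0],
                                       [100.0, 200.0, 300.0]])
```

Each rank sends $2(N-1)$ chunks of size $S/N$, matching the $2\frac{N-1}{N}S$ volume; the two phases are the same reduce-scatter and all-gather collectives that sharded data parallelism uses separately.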

Summary: The "Data Path" for a Gradient Update

Imagine you are training an LLM across 2 servers, each with 8 GPUs:

· Intra-Node: The 8 GPUs inside Server 1 use NVLink and NCCL to sum their gradients locally.

· Inter-Node: Server 1 sends its summed gradients to Server 2 over the InfiniBand network using RDMA.

· Synchronization: NCCL ensures all 16 GPUs now have the exact same updated weights before the next training step begins.

| Technology | Layer | Scope | Primary Benefit |
| --- | --- | --- | --- |
| NVLink | Hardware | Inside one server | Extreme speed; eliminates PCIe bottleneck. |
| InfiniBand | Network | Across servers | Scalability; zero-copy data transfer (RDMA). |
| NCCL | Software | Global | Optimizes communication patterns (All-Reduce). |

3.2.1 High Throughput: PagedAttention (vLLM), Continuous Batching, Speculative Decoding.

In the AI landscape of 2026, the transition from "model research" to "inference at scale" has made these three technologies the industry standard. They solve the memory wall and the autoregressive bottleneck that previously made LLMs slow and expensive to serve.

1. PagedAttention (vLLM): The "Virtual Memory" of AI

Traditionally, the KV-Cache (which stores the model's "memory" of previous tokens) had to be stored in contiguous memory blocks. Because we don't know how long a response will be, we had to reserve huge chunks of VRAM "just in case," leading to 60-80% memory waste.

· How it Works: Inspired by Operating Systems, PagedAttention partitions the KV-Cache into small, non-contiguous blocks (pages).

· The Benefit: It eliminates fragmentation. Memory is only allocated as needed.

· Senior Insight: By reducing memory waste to less than 4%, PagedAttention allows you to fit 3x to 5x more requests on the same GPU, directly increasing throughput without changing the model.
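The block-table idea can be sketched with a toy allocator. This is a simplified illustration of the paging concept only, not vLLM's actual data structures; the class and method names are invented for the example.

```python
class PagedKVCache:
    """Toy KV-cache allocator that hands out fixed-size blocks on demand."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}   # request id -> list of physical block ids
        self.lengths = {}        # request id -> number of cached tokens

    def append_token(self, request_id):
        table = self.block_tables.setdefault(request_id, [])
        length = self.lengths.get(request_id, 0)
        if length == len(table) * self.block_size:   # no block yet, or last block is full
            if not self.free_blocks:
                raise MemoryError("no free KV blocks")
            table.append(self.free_blocks.pop())
        self.lengths[request_id] = length + 1

    def free(self, request_id):
        self.free_blocks.extend(self.block_tables.pop(request_id, []))
        self.lengths.pop(request_id, None)

    def wasted_slots(self):
        return sum(len(table) * self.block_size - self.lengths[rid]
                   for rid, table in self.block_tables.items())


cache = PagedKVCache(num_blocks=8, block_size=16)
for _ in range(20):
    cache.append_token("a")
for _ in range(5):
    cache.append_token("b")

blocks_for_a = len(cache.block_tables["a"])
wasted = cache.wasted_slots()
cache.free("a")
free_after_release = len(cache.free_blocks)
```

Waste is limited to the unfilled tail of each request's last block, and freed blocks are immediately reusable by any other request.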

2. Continuous Batching: Eliminating the "Sawtooth"

Traditional batching (Static Batching) waits for every request in a group to finish before starting a new batch. If one user asks for a 500-word essay and another for a 2-word "Hello," the GPU sits idle waiting for the essay to finish.

· How it Works: Also called Iteration-Level Scheduling, it admits new requests into the batch the moment a single token is generated or a request finishes.

· The Benefit: The GPU is never "waiting" for a batch boundary. It stays at near 100% utilization.

· Senior Insight: Continuous batching can improve throughput by 10x to 20x over static methods. It effectively "fills the gaps" in GPU compute cycles that were previously wasted.
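The "sawtooth" effect can be reproduced with a small scheduling simulation. It counts decode iterations only and assumes every iteration costs the same regardless of batch occupancy, which is a simplification; request lengths are made up.

```python
def static_batching_steps(lengths, batch_size):
    """Each batch runs until its longest request finishes."""
    steps = 0
    for i in range(0, len(lengths), batch_size):
        steps += max(lengths[i:i + batch_size])
    return steps


def continuous_batching_steps(lengths, batch_size):
    """Free slots are refilled from the queue at every iteration."""
    queue = list(lengths)
    running = []
    steps = 0
    while queue or running:
        while queue and len(running) < batch_size:
            running.append(queue.pop(0))
        running = [remaining - 1 for remaining in running]   # one decode step for all
        running = [remaining for remaining in running if remaining > 0]
        steps += 1
    return steps


# Tokens to generate per request: two long essays mixed with short replies.
requests = [500, 2, 2, 2, 500, 2, 2, 2]
static_steps = static_batching_steps(requests, batch_size=4)
continuous_steps = continuous_batching_steps(requests, batch_size=4)
```

In this toy workload the second long request starts almost immediately instead of waiting for the first batch to drain, roughly halving the total number of iterations.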

3. Speculative Decoding: The "Draft and Verify" Loop

LLM inference is memory-bound, meaning it takes more time to load the model weights from memory than to actually do the math. Producing 5 tokens normally requires 5 full trips to the GPU memory.

· How it Works:

1. A tiny, ultra-fast Draft Model (e.g., a 100M parameter model) "guesses" the next 5-10 tokens.

2. The large Target Model (e.g., a 70B model) verifies all those tokens in a single forward pass.

· The Benefit: If the draft model is right, you get 5 tokens for the "cost" of 1. If it's wrong, the target model simply corrects it and you lose nothing but a tiny bit of extra compute.

· Senior Insight: This is most effective in low-batch regimes (e.g., real-time chat). In high-throughput server environments, the extra compute for the draft model can sometimes compete with the main model, so it must be tuned carefully.
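The draft-and-verify loop can be sketched with two toy "models" that are plain functions over integer tokens. This uses greedy verification for simplicity (sampling-based systems use a rejection-sampling rule instead), and the verification loop stands in for what would be a single batched forward pass of the target model.

```python
def speculative_decode(target_next, draft_next, prompt, num_tokens, k=3):
    """Return (generated sequence, number of target verification passes)."""
    out = list(prompt)
    target_passes = 0
    while len(out) - len(prompt) < num_tokens:
        # 1. The draft model proposes k tokens autoregressively.
        draft = []
        for _ in range(k):
            draft.append(draft_next(out + draft))

        # 2. The target model checks every proposed position (one pass in a real system).
        target_passes += 1
        accepted = []
        for token in draft:
            expected = target_next(out + accepted)
            if token == expected:
                accepted.append(token)
            else:
                accepted.append(expected)   # replace the first wrong guess and stop
                break
        else:
            accepted.append(target_next(out + accepted))   # bonus token when all match
        out.extend(accepted)
    return out[:len(prompt) + num_tokens], target_passes


def target_next(seq):
    return (seq[-1] + 1) % 10


def draft_next(seq):
    # Hypothetical draft model: agrees with the target except right after a 4.
    return 0 if seq[-1] % 5 == 4 else (seq[-1] + 1) % 10


tokens, passes = speculative_decode(target_next, draft_next, prompt=[0], num_tokens=12)
```

The output is identical to decoding with the target alone, but it takes 4 target passes instead of 12 in this toy setting.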

Comparison Table: Throughput vs. Latency

| Technique | Primary Goal | Solves For... | Best For... |
| --- | --- | --- | --- |
| PagedAttention | Throughput | Memory Fragmentation | Massive scaling / Multi-user |
| Continuous Batching | Throughput | GPU Underutilization | High-concurrency environments |
| Speculative Decoding | Latency | Serial Bottleneck | Real-time / Low-latency chat |

3.2.2 Deployment: Model A/B testing, Canary releases, Blue/Green deployments.

In the AI lifecycle, "Deployment" is where the model finally meets real-world data. As a Senior AI Engineer, you aren't just "pushing code"; you are managing the risk of model failure, data drift, and performance regressions.

The choice of strategy depends on whether your goal is experimentation (finding the best model) or stability (safely updating the model).

1. Model A/B Testing

Goal: Experimentation and statistical validation.

In A/B testing (or "Champion-Challenger"), you run two or more model versions in parallel to see which one performs better against a specific Business KPI (e.g., click-through rate, conversion).

· The Mechanism: A load balancer or "Router" randomly assigns a percentage of incoming traffic to Model A (the Champion) and the rest to Model B (the Challenger).

· Success Metric: Unlike training metrics (Accuracy/Loss), A/B testing focuses on Overall Evaluation Criteria (OEC).

· Senior Insight: A/B tests must run long enough to reach statistical significance. Beware of "peeking" at the results early; random noise often looks like a "winner" in the first 24 hours.
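To illustrate why sample size matters, here is a minimal two-proportion z-test written with the standard library only. It assumes a binary conversion metric and a fixed-horizon test; the traffic numbers are invented for the example.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))   # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Same observed rates (10% vs 11%), very different amounts of evidence.
z_small, p_small = two_proportion_z_test(10, 100, 11, 100)
z_large, p_large = two_proportion_z_test(1000, 10000, 1100, 10000)
```

The same one-point lift is indistinguishable from noise at 100 users per arm but clears the conventional 0.05 threshold at 10,000. Repeatedly checking the p-value before the planned sample size is reached inflates the false-positive rate, which is the "peeking" problem.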

2. Canary Releases

Goal: Risk mitigation and "Blast Radius" control.

Named after the "canary in a coal mine," this strategy is used to catch critical failures (crashes, 500 errors, extreme latency) before they affect your entire user base.

· The Mechanism: You deploy the new model to a tiny subset of users (e.g., 1%). If the technical metrics (latency, error rate) stay healthy for a set period, you gradually "ramp up" to 5%, 20%, and finally 100%.

· The Guardrail: Automated rollbacks are essential. If the "canary" model triggers an alert (e.g., latency > 200ms), the system should automatically kill the canary and route 100% of traffic back to the stable version.

· Senior Insight: In 2026, we use Cell-based Canaries, where we roll out to specific regions or "cells" (e.g., just the "US-East" region) before going global.

3. Blue/Green Deployments

Goal: High availability and near-zero downtime.

This is a "switch-over" strategy that utilizes two identical production environments: Blue (the current live version) and Green (the new version).

· The Mechanism:

· Stage: You deploy the new model to the "Green" environment, which is currently idle.

· Test: You run smoke tests and "Warm up" the model (loading weights onto the GPUs and priming caches with representative requests) in the Green environment.

· Switch: You flip the load balancer switch. 100% of traffic moves from Blue to Green instantly.

· The Advantage: If Green fails, you flip the switch back to Blue immediately. There is no "ramp-up" time; the rollback is instantaneous.

· Senior Insight: The main challenge is Cost and State. You are essentially paying for double the infrastructure. Additionally, you must ensure that your Database/Feature Store is compatible with both versions during the transition.

Comparison for System Design

| Strategy | Primary Driver | Traffic Split | Main Cost |
| --- | --- | --- | --- |
| A/B Testing | Data Science (Which is better?) | Random (e.g., 50/50) | High (Long duration) |
| Canary | SRE (Is it safe?) | Incremental (1% $\to$ 100%) | Low (Shared infra) |
| Blue/Green | Operations (Zero downtime) | Binary (0 or 100%) | High (Duplicate infra) |

The "Senior Stack" Workflow

In a high-maturity MLOps pipeline, you often combine these:

1. Shadow Deployment: Run the new model in parallel with the live one, logging its predictions but not showing them to users (0% impact).

2. Canary Release: Once Shadow results look safe, move to a 1% Canary to check real-world latency.

3. A/B Test: Once the Canary is stable at 10%, run a week-long A/B test to see if the new model actually improves business metrics.

4. Full Rollout: Cut over to 100%.

3.3.1 Pipelines: Vector DBs (Pinecone, Milvus), Feature Stores, ETL for unstructured data.

In a modern AI architecture, Data Pipelines are the nervous system that connects raw information to live models. For a Senior AI Engineer, this involves managing three distinct but overlapping components: Vector Databases for semantic memory, Feature Stores for model inputs, and ETL for turning "messy" unstructured data into intelligence.

1. Vector Databases: The "Semantic Memory"

Traditional databases (SQL/NoSQL) are built for exact matches (e.g., "Find user where ID = 123"). Vector Databases are built for Similarity Search (e.g., "Find images that look like this one" or "Find documents with this meaning").

· How it Works: They store Embeddings—high-dimensional numerical vectors generated by models like BERT or CLIP.

· Indexing Algorithms: To find the most similar vector among billions, they use ANN (Approximate Nearest Neighbor) algorithms like HNSW (Hierarchical Navigable Small Worlds) or IVF (Inverted File Index).

Pinecone vs. Milvus

| Feature | Pinecone | Milvus |
| --- | --- | --- |
| Model | Managed / Serverless (SaaS) | Open-Source / Self-Hosted / Managed (Zilliz) |
| Effort | Zero-Ops: Just an API call. | High: Requires managing clusters, shards, and GPU acceleration. |
| Scaling | Automatic scaling; usage-based pricing. | Manual or auto-scaling; highly customizable for billions of vectors. |
| Best For | Fast prototyping and production RAG. | Enterprise-scale, custom infrastructure, and high-performance GPU tasks. |

2. Feature Stores: The "Consistency Hub"

The biggest failure point in AI production is Train-Serve Skew: when the model uses one version of a feature for training but receives a slightly different version during live inference. Feature Stores solve this by centralizing the "Truth."

· Offline Store (The Library): Stores months or years of historical data for training (e.g., "What was this user's average spend over the last 30 days?").

· Online Store (The Cache): A low-latency database (like Redis or DynamoDB) that serves the freshest data for inference in milliseconds.

· Feature Registry: A catalog where engineers can discover, version, and share features across teams, preventing the "re-inventing the wheel" problem.

3. ETL for Unstructured Data: The "Bridge"

80–90% of enterprise data is unstructured (PDFs, emails, images, audio). Standard ETL (Extract, Transform, Load) pipelines fail here because they can't "read" the content. AI-driven ETL is the new standard.

· Extract: Pulling data from S3 buckets, APIs, or Slack channels.

· Transform (The AI Step):

1. OCR/Parsing: Turning PDFs or images into raw text.

2. Chunking: Breaking long documents into smaller, overlapping pieces (critical for RAG accuracy).

3. Enrichment: Using an LLM to extract metadata (e.g., "Sentiment: Positive," "Language: French," "Topic: Legal").

4. Embedding: Running the cleaned chunks through an embedding model to create vectors.

· Load: Pushing the vectors to a Vector DB and the metadata/features to a Feature Store.
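The chunking step in the Transform stage can be sketched as a sliding window over tokens. This is a minimal illustration that splits on whitespace; real pipelines usually chunk by model tokens or by document structure, and the sizes here are arbitrary.

```python
def chunk_tokens(tokens, chunk_size, overlap):
    """Split tokens into windows of chunk_size that share `overlap` tokens with the previous one."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break   # the last window already reaches the end of the document
    return chunks


document = "t0 t1 t2 t3 t4 t5 t6 t7 t8 t9".split()
chunks = chunk_tokens(document, chunk_size=4, overlap=1)
```

The overlap means a sentence that straddles a boundary still appears intact in at least one chunk, at the cost of embedding some tokens twice.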

Summary: Which one do you use?

| Scenario | Use This Tool... |
| --- | --- |
| Building a Chatbot (RAG) | Vector DB (Pinecone/Milvus) to store and retrieve document context. |
| Predicting User Churn | Feature Store to track user behavior consistently over time. |
| Processing 10k Customer Emails | AI ETL Pipeline to clean, chunk, and embed the text for analysis. |

The "Senior" Architecture Pattern

In 2026, the standard pipeline looks like this:

1. Raw Data lands in a Data Lake (S3/Azure Blob).

2. Orchestrator (Airflow/Dagster) triggers an AI ETL job.

3. Unstructured pieces go to Milvus (Vector DB).

4. Extracted metrics (e.g., word count, sentiment) go to Feast (Feature Store).

5. The LLM queries both at runtime to provide a context-aware, highly accurate response.

4 LLM Orchestration & Agents

4.1 Retrieval Augmented Generation (RAG):

4.1.1 Semantic search, Metadata filtering, Hybrid retrieval, Reranking models.

In the context of Retrieval-Augmented Generation (RAG), these four concepts represent the evolution from simple search to "intelligent retrieval." For a Senior AI Engineer, mastering these is the key to solving the "Retriever Problem"—the fact that if the wrong context is fetched, even the most powerful LLM will hallucinate.

1. Semantic Search

Semantic Search (or Dense Retrieval) uses deep learning to understand the "meaning" of a query rather than just looking for exact words.

· How it works: Text chunks and queries are transformed into Dense Vectors (Embeddings) using models like BERT or OpenAI's text-embedding-3.

· The Logic: In the vector space, the sentence "How to fix a flat tire" will be geometrically close to "Replacing a punctured car wheel," even though they share no identical words.

· Senior Insight: While powerful, semantic search can struggle with specific acronyms or rare product IDs (e.g., "TX-900"). This is why it is rarely used in isolation in enterprise environments.

2. Metadata Filtering

Metadata filtering allows you to combine structured constraints (SQL-like) with unstructured semantic search.

· Pre-filtering (The Industry Standard): You apply the filter before the vector search (e.g., "Search for 'return policy' ONLY in documents from 2024"). This guarantees that every one of your top-K results satisfies the criteria.

· Post-filtering: You perform a broad vector search first, then discard results that don't match the metadata.

· The Risk: If you fetch 10 items but only 1 matches the "2024" filter, your LLM will only receive 1 piece of context instead of the requested 10.

· Senior Insight: In 2026, most vector DBs (Pinecone, Milvus) support filtered approximate search (e.g., HNSW with filtered traversal), which checks the filter while walking the index and is more efficient than a full scan.
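
The difference between the two filter orders is easiest to see on a toy corpus. A minimal sketch, assuming hypothetical document ids, years, and similarity scores (no real vector DB is involved):

```python
# Toy corpus: (doc_id, year, similarity_to_query). All values are made up.
DOCS = [
    ("a", 2023, 0.95),
    ("b", 2023, 0.90),
    ("c", 2024, 0.85),
    ("d", 2023, 0.80),
    ("e", 2024, 0.60),
    ("f", 2024, 0.40),
]

def top_k(docs, k):
    """Return the k highest-scoring docs."""
    return sorted(docs, key=lambda d: d[2], reverse=True)[:k]

def pre_filter(docs, year, k):
    """Filter on metadata first, then take the top-k of what remains."""
    return top_k([d for d in docs if d[1] == year], k)

def post_filter(docs, year, k):
    """Take the top-k first, then discard results that fail the filter."""
    return [d for d in top_k(docs, k) if d[1] == year]

pre = pre_filter(DOCS, 2024, k=3)
post = post_filter(DOCS, 2024, k=3)
print([d[0] for d in pre])   # all three results are from 2024
print([d[0] for d in post])  # only the 2024 docs that made the global top 3 survive
```

With this data, pre-filtering returns three 2024 documents while post-filtering returns a single one, which is the risk described above.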

3. Hybrid Retrieval

Hybrid Retrieval combines the best of both worlds: BM25 (Keyword/Sparse Search) and Semantic Search (Dense Search).

· The Strategy:

1. Dense Search: Captures synonyms and general intent.

2. Sparse Search (BM25): Captures exact names, numbers, and technical terms.

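· Reciprocal Rank Fusion (RRF): This is the formula used to merge the two ranked lists into one. It prioritizes documents that appear high in both lists.

$$\mathrm{RRF}(d) = \sum_{r \in R} \frac{1}{k + r(d)}$$

Here $R$ is the set of ranked lists, $r(d)$ is the rank of document $d$ in list $r$ (1 = best), and $k$ is a smoothing constant (60 is a common default).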

· Senior Insight: Hybrid retrieval is non-negotiable for RAG systems that need to be "Google-grade." It prevents the common failure where a semantic search misses a specific SKU or error code because it’s "too unique."
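
The RRF formula translates almost directly into code. A minimal sketch, assuming 1-based ranks, k = 60 as a commonly used default, and hypothetical document ids for the two result lists:

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of doc ids (best first) into one list, best first."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Each list contributes 1 / (k + rank) for every document it contains.
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

dense = ["doc_tire", "doc_wheel", "doc_sku"]    # hypothetical dense (semantic) results
sparse = ["doc_sku", "doc_tire", "doc_manual"]  # hypothetical BM25 results
fused = reciprocal_rank_fusion([dense, sparse])
print(fused)
```

Documents that appear in both lists (`doc_tire`, `doc_sku`) rise above those found by only one retriever, without any need to normalize the two scoring scales.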

4. Reranking Models (The "Cross-Encoder")

Reranking is the final "polishing" step that significantly boosts precision.

· The Problem with Bi-Encoders: Standard vector search uses Bi-Encoders (the query and document are encoded separately). This is fast but lacks "attention" between the two.

· The Solution (Cross-Encoders): A reranker takes the Top 50–100 results from your hybrid search and passes the (Query, Document) pairs together through a transformer (like BGE-Reranker or Cohere Rerank).

· The Benefit: Because the model sees both the query and the document simultaneously, it can spot subtle contradictions or exact nuances that a bi-encoder missed.

· Senior Insight: Reranking adds latency. The engineering trade-off is typically: Fetch 100 with Hybrid (fast) → Rerank those candidates and keep the Top 10 (slower but high-precision) → Pass Top 5 to LLM.
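
The retrieve-then-rerank shape can be sketched without a model. In the sketch below, `toy_pair_score` is a hypothetical word-overlap stand-in for a real cross-encoder; it is there only so the example runs, and a production system would plug in a model such as the rerankers named above:

```python
def rerank(query, candidates, score_pair, top_n=5):
    """Score each (query, document) pair jointly and keep the top_n documents."""
    scored = [(score_pair(query, doc), doc) for doc in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_n]]

def toy_pair_score(query, doc):
    """Stand-in for a cross-encoder: fraction of query words found in the document."""
    q_words = set(query.lower().split())
    d_words = set(doc.lower().split())
    return len(q_words & d_words) / len(q_words)

# Hypothetical candidates, as if returned by the hybrid retrieval stage.
candidates = [
    "shipping times for international orders",
    "return policy for opened electronics",
    "how to reset your password",
]
top = rerank("return policy electronics", candidates, toy_pair_score, top_n=2)
print(top)
```

The key design point is that `score_pair` sees the query and the document together, whereas the first-stage retriever scored them from separately computed vectors.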

Summary of the "Modern Retrieval" Stack

| Stage | Process | Component | Goal |
| --- | --- | --- | --- |
| 1. Filtering | Pre-filter metadata | Vector DB Metadata | Narrow the "Search Space" |
| 2. Retrieval | Hybrid (BM25 + Dense) | Elasticsearch/Milvus | Maximize Recall (Find all candidates) |
| 3. Refining | Cross-Encoder Rerank | Cohere/BGE | Maximize Precision (Find the best) |
| 4. Generation | Context + Prompt | GPT-4 / Claude 3.5 | Generate the Answer |

4.2.1 State Machines (LangGraph), Multi-agent coordination, Tool-calling (ReAct).

In the landscape of 2026, building AI systems has shifted from simple "prompting" to building Cognitive Architectures. These three concepts are the foundation of how we create agents that don't just "talk," but actually "do" work reliably.

1. State Machines (LangGraph)

In early AI development, we used "Chains" (linear sequences). However, real-world tasks are rarely linear; they require loops, retries, and conditional logic. LangGraph uses a State Machine (specifically, a directed graph that is allowed to contain cycles) to solve this.

· The State (The Memory): A centralized object (like a TypedDict) that stores the current progress, message history, and any data gathered by the agent. Every "node" in the graph can read from and update this state.

· Nodes (The Workers): Individual functions or LLM calls. One node might be a "Translator," while another is a "Code Executor."

· Edges (The Path): Connections between nodes.

· Conditional Edges: A routing function (often driven by the LLM's output) decides where to go next based on the state. For example: "If the code has errors, go back to the Coder node; otherwise, go to the Reporter node."

· Cycles: Unlike basic chains, LangGraph allows the model to loop back to a previous step—critical for self-correction and iterative refinement.
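
The state, node, edge, and cycle ideas above can be sketched in plain Python. This is a library-free illustration, not LangGraph's API; the node names, the `State` fields, and the `run` helper are all hypothetical:

```python
from typing import Callable, Dict, TypedDict

class State(TypedDict):
    code: str
    errors: int
    attempts: int
    report: str

def coder(state: State) -> State:
    """Pretend to write code: the first attempt has one error, the retry has none."""
    attempts = state["attempts"] + 1
    errors = 1 if attempts == 1 else 0
    return {**state, "code": f"v{attempts}", "errors": errors, "attempts": attempts}

def reporter(state: State) -> State:
    """Summarize the final state."""
    return {**state, "report": f"shipped {state['code']} after {state['attempts']} attempt(s)"}

def route_after_coder(state: State) -> str:
    """Conditional edge: loop back to the coder while errors remain."""
    return "coder" if state["errors"] > 0 else "reporter"

NODES: Dict[str, Callable[[State], State]] = {"coder": coder, "reporter": reporter}
EDGES: Dict[str, Callable[[State], str]] = {"coder": route_after_coder, "reporter": lambda s: "END"}

def run(state: State, start: str = "coder", max_steps: int = 10) -> State:
    node = start
    for _ in range(max_steps):  # step cap guards against infinite cycles
        state = NODES[node](state)
        node = EDGES[node](state)
        if node == "END":
            return state
    raise RuntimeError("step limit reached")

final = run({"code": "", "errors": 0, "attempts": 0, "report": ""})
print(final["report"])
```

The coder node runs twice here: the conditional edge sends the flow back once because the first attempt reports an error, which is the self-correction cycle a linear chain cannot express.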

2. Multi-Agent Coordination

When a task is too complex for one agent (e.g., "Write a technical blog post and verify the facts"), we use a team of specialized agents. Coordination is the "management style" we use to keep them in sync.

· Sequential Handoff: Agent A finishes its task and passes the result to Agent B (e.g., Researcher → Writer).

· Hierarchical (Supervisor/Manager): A central "Manager Agent" receives the user's request and delegates tasks to sub-agents (e.g., Coder, Designer, QA). The manager reviews their work before moving to the next step.

· Joint/Concurrent Collaboration: Multiple agents work on the same shared state simultaneously. For example, three different agents might analyze a financial report from different perspectives (Legal, Risk, Profit) and then merge their insights.
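
The first two coordination styles reduce to simple control flow once the agents are treated as functions. A minimal sketch, assuming stub agents that return strings instead of calling an LLM (all names here are hypothetical):

```python
def researcher(task):
    return f"notes on {task}"

def writer(notes):
    return f"draft based on {notes}"

def fact_checker(draft):
    return f"verified: {draft}"

def sequential(task, agents):
    """Sequential handoff: each agent's output is the next agent's input."""
    result = task
    for agent in agents:
        result = agent(result)
    return result

def supervisor(plan, workers):
    """Hierarchical: the supervisor assigns each subtask to a named worker and collects results."""
    return {name: workers[name](payload) for name, payload in plan}

article = sequential("vector databases", [researcher, writer, fact_checker])
print(article)

results = supervisor(
    [("researcher", "pricing"), ("writer", "pricing notes")],
    {"researcher": researcher, "writer": writer},
)
print(results)
```

In a real system the supervisor's plan would itself come from an LLM call, and the supervisor would review each result before issuing the next subtask.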

3. Tool-Calling (ReAct)

ReAct stands for Reasoning + Acting. It is the standard logic loop that allows an LLM to use external tools (like a calculator, a search engine, or a database) in a transparent way.

· The ReAct Loop:

1. Thought: The LLM reasons about the current state. "The user wants to know the stock price of NVDA. I don't have real-time data, so I should use the Google Search tool."

2. Action: The LLM outputs a specific command to call a tool, e.g., Search(query="NVDA stock price").

3. Observation: The system executes the tool and feeds the raw result back to the LLM. "NVDA is currently at $140.25."

4. Repeat: The LLM processes the observation and either provides a final answer or performs another "Thought → Action" cycle.

· The Impact: ReAct reduces hallucinations on tasks that depend on external facts, because the model must ground its "thoughts" in "observations" returned by real tools.
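
The Thought → Action → Observation loop can be sketched as a small driver around any text-in, text-out model. Here `scripted_llm` is a hypothetical stand-in that replays the NVDA example, the `search` tool returns a canned string, and the `Action: name("input")` text format is an assumption made for this sketch:

```python
import re

def scripted_llm(transcript):
    """Stand-in for a real model: acts once, then answers from the observation."""
    if "Observation:" not in transcript:
        return 'Thought: I need real-time data.\nAction: search("NVDA stock price")'
    observation = transcript.rsplit("Observation: ", 1)[1].strip()
    return f"Thought: I have what I need.\nFinal Answer: {observation}"

# Tool registry; the search tool returns a canned result for illustration.
TOOLS = {"search": lambda query: "NVDA is currently at $140.25."}

ACTION_RE = re.compile(r'Action: (\w+)\("(.*)"\)')

def react(question, llm, tools, max_turns=5):
    transcript = f"Question: {question}\n"
    for _ in range(max_turns):  # turn cap prevents endless tool loops
        reply = llm(transcript)
        transcript += reply + "\n"
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(reply)
        if match is None:
            raise ValueError("model produced neither an action nor an answer")
        tool_name, tool_input = match.groups()
        # Execute the tool and feed the raw result back as an observation.
        transcript += f"Observation: {tools[tool_name](tool_input)}\n"
    raise RuntimeError("turn limit reached")

answer = react("What is NVDA trading at?", scripted_llm, TOOLS)
print(answer)
```

Modern APIs usually replace the regex parsing with structured tool-call messages, but the loop itself keeps the same shape.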

Comparison for Senior Engineers

| Feature | State Machines (LangGraph) | Multi-Agent Coordination | Tool-Calling (ReAct) |
| --- | --- | --- | --- |
| Primary Goal | Reliability & Control | Complexity Management | Real-world Interaction |
| Core Logic | Pre-defined graph/flow | Team delegation/handoff | Recursive reasoning loop |
| Best For... | Business processes & workflows | Large-scale modular projects | General-purpose autonomous tasks |

4.3 Prompt Engineering (Advanced):

4.3.1 Chain-of-Thought (CoT), Few-shot prompting, Self-consistency, DSPy.

In the 2026 AI landscape, prompting has evolved from "creative writing" into a rigorous engineering discipline. For a Senior AI Engineer, these four techniques represent the shift from simple instructions to structured reasoning and programmatic optimization.

1. Chain-of-Thought (CoT)

Chain-of-Thought is the "Thinking Out Loud" protocol. It forces the model to generate intermediate reasoning steps before arriving at a final answer, which significantly boosts performance on multi-step logic and math.

· Zero-Shot CoT: Adding the phrase "Let's think step by step" to a prompt. This elicits step-by-step reasoning without needing any prior examples.

· Manual CoT: Providing a "worked-out" example where the reasoning process is explicitly shown.

· The Benefit: It reduces "cascading errors" where a model jumps to a wrong conclusion because it didn't verify the intermediate logic.

· Senior Insight: In 2026, many "reasoning models" (like the OpenAI o1-series or Claude 3.7 Sonnet with extended thinking) are trained to reason step by step before answering, making explicit CoT instructions less necessary for basic tasks but critical for complex system debugging.
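
The two CoT variants differ only in how the prompt is assembled. A minimal sketch, assuming a simple "Q:/A:" layout and a hypothetical worked example (the helper names are illustrative, not from any library):

```python
def zero_shot_cot(question):
    """Append the trigger phrase; no examples are included."""
    return f"Q: {question}\nA: Let's think step by step."

def manual_cot(question, worked_examples):
    """Prepend worked examples whose answers spell out the reasoning."""
    shots = []
    for q, reasoning, answer in worked_examples:
        shots.append(f"Q: {q}\nA: {reasoning} The answer is {answer}.")
    return "\n\n".join(shots) + f"\n\nQ: {question}\nA:"

# Hypothetical worked example: (question, reasoning, final answer).
example = (
    "A shop has 3 boxes of 4 pens. How many pens?",
    "3 boxes times 4 pens is 12 pens.",
    "12",
)

print(zero_shot_cot("What is 17 * 6?"))
print(manual_cot("What is 17 * 6?", [example]))
```

Ending every worked example with a fixed phrase such as "The answer is ..." also makes the model's final answer easy to parse out of its reasoning.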

2. Few-Shot Prompting

Few-Shot Prompting is the "Learning by Example" method. You provide the model with a small number of input-output pairs (usually 1–5) to establish a pattern, tone, or format.

· When to use: Use this when you need a highly specific output format (e.g., JSON with specific keys) or when the task is subjective (e.g., "Summarize this in the style of a 1920s noir detective").

· The "Shot" Levels:

· Zero-Shot: Instruction only.

· One-Shot: One example.

· Few-Shot: Multiple examples.

· Senior Insight: Avoid "over-shotting." Providing too many examples can lead to bias: the model might start copying the content of your examples rather than the structure. In 2026, RAG-based Few-Shotting (dynamically pulling relevant examples from a database) is the standard for enterprise-scale agents.
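
Dynamic example selection can be sketched in a few lines. In this sketch the "retriever" is a hypothetical word-overlap ranking used only so the code runs without an embedding model; the example pool and the "Input:/Output:" layout are likewise assumptions:

```python
def select_examples(query, pool, n=2):
    """Pick the n pool examples sharing the most words with the query (toy retriever)."""
    q_words = set(query.lower().split())

    def overlap(example):
        return len(q_words & set(example["input"].lower().split()))

    return sorted(pool, key=overlap, reverse=True)[:n]

def few_shot_prompt(instruction, examples, query):
    """Assemble instruction, input-output pairs, and the new input into one prompt."""
    shots = "\n".join(f"Input: {ex['input']}\nOutput: {ex['output']}" for ex in examples)
    return f"{instruction}\n{shots}\nInput: {query}\nOutput:"

# Hypothetical example pool; in production this would live in a database.
POOL = [
    {"input": "refund for damaged laptop", "output": '{"intent": "refund"}'},
    {"input": "change my delivery address", "output": '{"intent": "update_address"}'},
    {"input": "refund status for my order", "output": '{"intent": "refund_status"}'},
]

query = "refund for my broken laptop"
chosen = select_examples(query, POOL)
prompt = few_shot_prompt("Classify the request as JSON.", chosen, query)
print(prompt)
```

Capping `n` keeps the prompt short and limits how much example content the model can copy.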

3. Self-Consistency

Self-Consistency is the "Democracy of Logic" approach. It is an advanced decoding strategy typically used alongside CoT for high-stakes reasoning.

· The Process:

1. The model generates multiple (e.g., 5 or 10) independent reasoning paths for the same question at a high temperature (to ensure diversity).

2. The system looks at the final answers from all those paths.

3. The most frequent answer (the majority vote) is selected as the final result.

· The Logic: If multiple different ways of thinking lead to the same answer, that answer is statistically much more likely to be correct.

· Senior Insight: This technique is expensive (sampling N paths costs roughly N times the tokens, e.g., 10x for 10 paths) but is essential for mission-critical tasks like code generation, legal analysis, or medical diagnostics where a single "hallucinated" logic step could be catastrophic.
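
The voting step is a plain majority count over parsed final answers. A minimal sketch, assuming a hypothetical `scripted_sampler` that replays fixed answers in place of high-temperature LLM calls:

```python
from collections import Counter

def self_consistency(sample_answer, question, n_paths=10):
    """Sample n_paths final answers and return the most common one with its vote share."""
    answers = [sample_answer(question) for _ in range(n_paths)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n_paths

# Stand-in sampler: a real system would call an LLM at high temperature
# and extract the final answer from each reasoning path.
_scripted_answers = iter(["102", "92", "102", "112", "102"])

def scripted_sampler(question):
    return next(_scripted_answers)

answer, agreement = self_consistency(scripted_sampler, "What is 17 * 6?", n_paths=5)
print(answer, agreement)
```

The vote share is a useful by-product: a low agreement value is a signal to escalate the question to a human or a stronger model.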

4. DSPy (Declarative Self-improving Python)

DSPy is the "End of Prompt Engineering." Developed at Stanford, it is a framework that treats prompts like model weights: parameters that can be automatically optimized rather than hand-written.

· The Shift: Instead of manually "fiddling" with the wording of a prompt, you define:

1. Signatures: What the input and output look like (e.g., question -> answer).

2. Modules: The technique to use (e.g., dspy.ChainOfThought).

3. Optimizers (Teleprompters): An algorithm that takes a few data examples and automatically writes the best possible prompt and selects the best few-shot examples for you.

· The Benefit: Portability. If you switch from GPT-4o to Llama-3, you don't need to rewrite your prompts. You just re-run the DSPy optimizer, and it generates new, model-specific prompts.

· Senior Insight: DSPy represents the transition from "AI Art" to "AI Software Engineering." For a Senior Engineer, this is the tool used to build maintainable, version-controlled AI pipelines that don't break when a model provider updates their API.
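
The core idea behind an optimizer, searching over prompts against a metric instead of hand-tuning them, can be shown without the library. The sketch below is not DSPy's API or its actual algorithm; `optimize_prompt`, `stub_model`, and the candidate instructions are hypothetical stand-ins:

```python
def optimize_prompt(candidates, model, trainset, metric):
    """Return the candidate instruction with the highest average metric on trainset."""
    def average_score(instruction):
        total = sum(metric(model(instruction, x), y) for x, y in trainset)
        return total / len(trainset)
    return max(candidates, key=average_score)

def stub_model(instruction, text):
    """Stand-in for an LLM: upper-cases the input only when the instruction asks for it."""
    return text.upper() if "upper" in instruction.lower() else text

def exact_match(prediction, gold):
    return float(prediction == gold)

# Hypothetical labeled examples: (input, expected output).
trainset = [("ok", "OK"), ("fail", "FAIL")]
candidates = ["Repeat the input.", "Repeat the input in upper case."]

best = optimize_prompt(candidates, stub_model, trainset, exact_match)
print(best)
```

Swapping `stub_model` for a different model and re-running the search is the portability argument made above: the data and metric stay fixed while the prompt is regenerated.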

The "Senior" Decision Matrix

| Task Complexity | Strategy | Primary Tool/Method |
| --- | --- | --- |
| Simple Extraction | Zero-Shot | Clear, specific instructions. |
| Strict Formatting | Few-Shot | Provide 2–3 JSON/Markdown examples. |
| Logical Reasoning | CoT | "Think step-by-step" or worked examples. |
| High-Stakes Logic | Self-Consistency | Majority vote over 10+ CoT paths. |
| Scalable Pipelines | DSPy | Signatures + Optimizers (Automated). |

5.1 Evaluation (The hardest part):

5.1.1 LLM-as-a-judge, G-Eval, Human-in-the-loop (HITL).

Evaluating the quality of LLM outputs is notoriously difficult because traditional metrics (like BLEU or ROUGE) only measure word-overlap and miss the "intelligence" of the response.